Journal: uffmm.org, ISSN 2567-6458, July 24-25, 2019

Email: info@uffmm.org

Author: Gerd Doeben-Henisch

Email: gerd@doeben-henisch.de

CONTEXT

This is the next step in the Python 3 programming project. The overall context is still the Python Co-Learning project.

SUBJECT

See part 7.

SCENARIO

Motivation

See part 7.

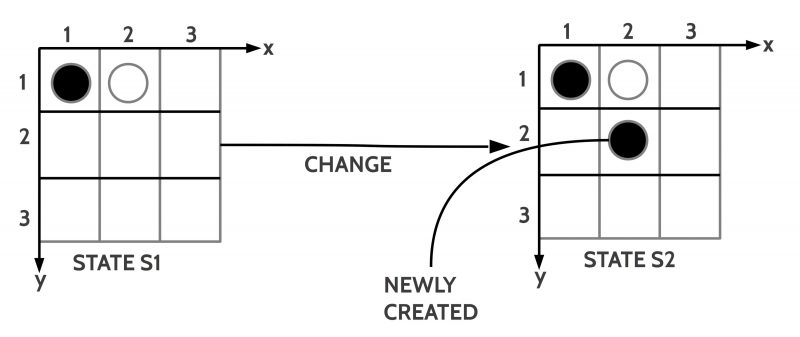

In this part 8 I focus on the dynamic part of the virtual world. Which kinds of changes are possible, and how can they be managed?

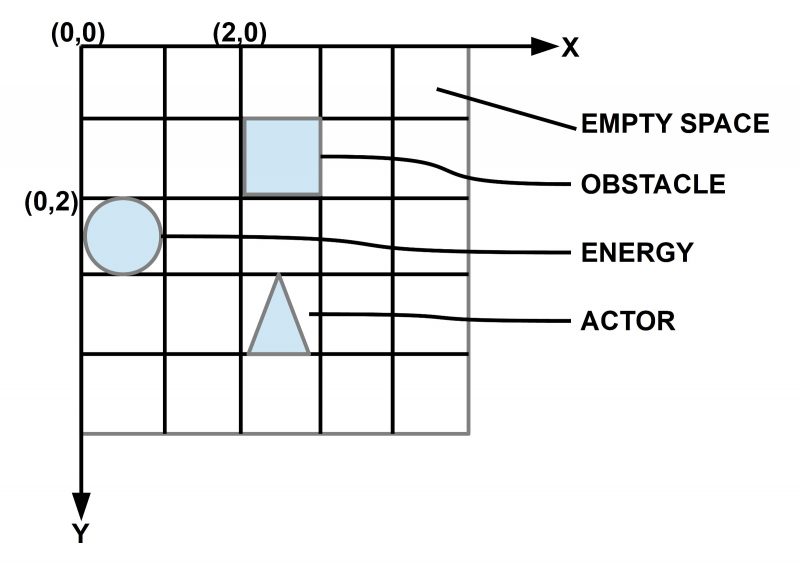

Virtual World (VW) – General Assumptions

See part 7.

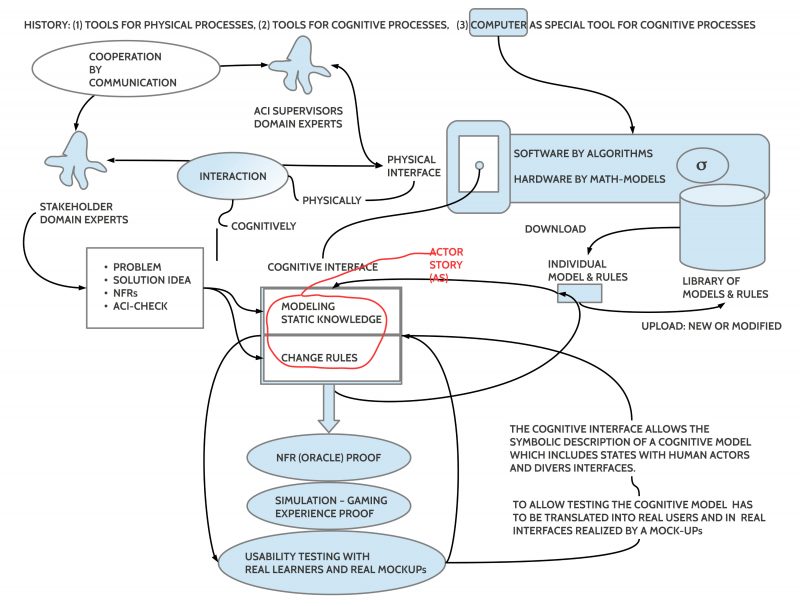

ACTOR STORY

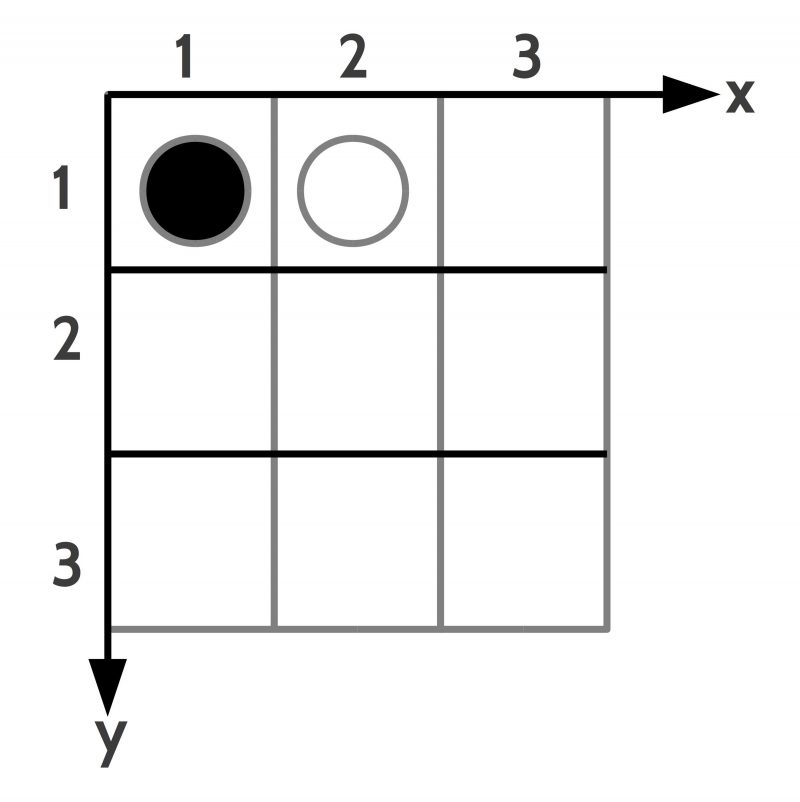

1. After completing a 2D virtual world with obstacles ‘O’, food objects ‘F’ and actors ‘A’, the general world cycling loop is started. In this loop all possible changes are managed. The original user has no active role during this cycling loop; he now acts as the administrator who starts or stops the cycling loop, or interrupts it to get some data.

2. The cycling loop can be viewed as structured in different phases.

a) In the first phase all general services are managed, that is, the energy levels of all objects: (i) food objects are updated so that they grow, and (ii) actor objects are updated by reducing their energy by the standard amount per time step. If the energy level of an actor falls below 1, the avatar of the actor is removed from the object list as well as from the grid.

b) In the second phase all offered actions are collected. So far the only sources of actions are the actors. An actor can offer either (i) an eat-action, if it currently dwells on a position with food, or (ii) a move-action.

(i) In case of an eat-action the actor sends a message to the action buffer of the world: [ID of actor, position, eat-action]

(ii) In case of a move-action the actor sends a message to the action buffer of the world: [ID of actor, position, move-action, direction DIR in [0,7]].

3. The world function wf selects the action messages one after the other in random order and processes them. There are the following cases:

(a) In case of an eat-action it is checked whether there is food at the position. If not, nothing happens; if yes, the energy level of the eating actor is increased inside the actor and the amount of the food is decreased. There is a correspondence between the amount of food and the consumed energy.

(b) In case of a move-action it is checked whether there is a path in the selected direction to move one cell. If not, nothing happens; if yes, the move happens and the energy level is decreased by a certain amount due to moving.

4. After finishing all services the world clock WCLCK is increased by one (Remark: in later versions there may be actions whose duration needs more than one cycle to finish).
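Steps 3 and 4 of the actor story can be sketched roughly as follows. This is only a minimal illustration, not the code of vw3.py: the names `DIRS`, `EAT_AMOUNT`, `MOVE_COST`, `food_at`, `process_actions` and `world_cycle` are hypothetical, the mapping of DIR in [0,7] to grid offsets is an assumption, and so is the rule that an actor may step onto a food cell. The object layout `[y, x, type, [id, energy, ...]]` is read off the demo output further below.

```python
import random

# Hypothetical encoding of DIR in [0,7] as (dy, dx) offsets, clockwise from north.
# The text only fixes the range [0,7]; this mapping is an assumption.
DIRS = [(-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1)]

EAT_AMOUNT = 50  # assumed amount of food (= energy) consumed by one eat-action
MOVE_COST = 5    # assumed energy cost of one move


def food_at(foods, y, x):
    """Return the food object located at (y, x), or None."""
    return next((f for f in foods if (f[0], f[1]) == (y, x)), None)


def process_actions(actions, actors, foods, grid, rng=random):
    """Process all buffered action messages in random order."""
    rng.shuffle(actions)
    for msg in actions:
        actor_id, (y, x), kind = msg[0], msg[1], msg[2]
        actor = next((a for a in actors if a[3][0] == actor_id), None)
        if actor is None:
            continue  # the actor may have been removed already
        if kind == 'eat':
            food = food_at(foods, y, x)
            if food is not None and food[3][1] > 0:
                bite = min(EAT_AMOUNT, food[3][1])
                food[3][1] -= bite   # the food amount shrinks ...
                actor[3][1] += bite  # ... and the actor gains the same energy
        elif kind == 'move':
            dy, dx = DIRS[msg[3]]
            ny, nx = y + dy, x + dx
            inside = 0 <= ny < len(grid) and 0 <= nx < len(grid[0])
            if inside and grid[ny][nx] in ('_', 'F'):  # path is free
                # Assumption: a food cell shows 'F' again once the actor leaves it
                grid[y][x] = 'F' if food_at(foods, y, x) else '_'
                grid[ny][nx] = 'A'
                actor[0], actor[1] = ny, nx
                actor[3][1] -= MOVE_COST


def world_cycle(clock, actions, actors, foods, grid, rng=random):
    """Process the action buffer, empty it, and advance the world clock by one."""
    process_actions(actions, actors, foods, grid, rng)
    actions.clear()
    return clock + 1
```

A message such as `[0, (0, 1), 'move', 4]` would, under these assumptions, ask the world to move actor 0 one cell south from position (0, 1); a blocked move or an eat-action on an empty cell leaves the world unchanged, while the clock still advances.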

IMPLEMENTATION

vw3.py (Main File)

gridHelper.py (Import module)

EXERCISES

One new aspect is the update of the food objects, which ‘refresh’ their amount, and with it their energy, depending on the time. Here it is a simple time-dependent increment. In later versions one can differentiate between different foods, different surrounding conditions, etc.

    def foodUpdate(olF, objL):
        for i in range(len(olF)):  # Cycle through all food objects
            if olF[i][3][1] < objL['F'][1]:  # Compare with standard maximum
                olF[i][3][1] += objL['F'][2]  # If lower, increment by the standard amount
        return olF
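To make the regrowth rule concrete, here is a small self-contained sketch. The function name `regrow_food` and its parameters are hypothetical; the record layout `[y, x, 'F', [id, amount, increment]]` is taken from the demo output below, where the meaning of the last value is an interpretation. Capping the amount at the maximum with `min()` is an additional assumption that the function above does not make.

```python
def regrow_food(foods, max_amount, increment):
    """Increase each food amount by `increment` while it is below `max_amount`."""
    for food in foods:
        if food[3][1] < max_amount:
            # Assumption: never grow beyond the standard maximum
            food[3][1] = min(food[3][1] + increment, max_amount)
    return foods


# Two food objects: one partly eaten, one already at the maximum
foods = [[0, 4, 'F', [0, 950, 20]], [0, 6, 'F', [1, 1000, 20]]]
for cycle in range(3):
    regrow_food(foods, max_amount=1000, increment=20)
    print(cycle, [f[3][1] for f in foods])
```

In this run the first food object grows from 950 to 970, then 990, and is capped at 1000 in the third cycle, while the second object stays at 1000 throughout.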

The other task during the object update is the management of the actor objects with regard to their energy. They lose energy both as a direct function of time and through movement. During the simulation actors can increase their energy again by eating, but as long as this does not happen, they lose energy in every new cycle. This can lead to the extreme situation where an actor reaches the zero-energy state (energy < 1). In this case the actor is removed from the grid (its old position ‘A’ is replaced by an empty space ‘_’). At the same time the actor is removed from the list of all actors olA.

    def actorUpdate(olA, objL, mx):
        for i in range(len(olA)):  # Cycle through all actor objects
            olA[i][3][1] = olA[i][3][1] - objL['A'][2]  # Decrement the energy level by the standard
        # Check whether an element is below 1 with its energy
        iL = [y for y in range(len(olA)) if olA[y][3][1] < 1]  # Indices of all actors with no energy
        if iL != []:  # If the list is not empty:
            # Collect the coordinates of these actors in the grid
            yxL = [[olA[y][x]] for y in range(len(olA)) for x in range(2) if olA[y][3][1] < 1]
            # Concentrate lists as (y,x) pairs
            yxc = [[yxL[i][0], yxL[i+1][0]] for i in range(0, len(yxL), 2)]
            # Replace selected actors in the grid by '_'
            if yxc != []:
                for i in range(len(yxc)):
                    y = yxc[i][0]
                    x = yxc[i][1]
                    mx[y][x] = '_'
            # Delete the actors from the olA list.
            # Because pop() shortens the olA list, one has to start indexing from the 'right end';
            # then each removal keeps the remaining (smaller) indices valid.
            for i in range(len(iL)-1, -1, -1):  # A reverse order!
                olA.pop(iL[i])
        # Return the updated list in both cases (with or without removals)
        return olA
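The removal step can also be written without index bookkeeping. The following is only a sketch of an alternative, not the version used in vw3.py; the name `remove_depleted` and the `empty` parameter are hypothetical. It clears the grid cell of every actor with energy below 1 and then filters the list in place, so the caller's reference to the actor list stays valid.

```python
def remove_depleted(actors, grid, empty='_'):
    """Clear the grid cells of actors with energy < 1 and drop them from the list."""
    for y, x, _, props in actors:
        if props[1] < 1:
            grid[y][x] = empty
    # Slice assignment changes the existing list object instead of creating a new one
    actors[:] = [a for a in actors if a[3][1] >= 1]
    return actors


grid = [['_', 'A', '_'],
        ['A', '_', 'A']]
actors = [[0, 1, 'A', [0, 0, 200, 100]],
          [1, 0, 'A', [1, 40, 200, 100]],
          [1, 2, 'A', [2, -5, 200, 100]]]
remove_depleted(actors, grid)
print(actors)
print(grid)
```

Here only the actor at (1, 0) with energy 40 survives; the two other cells are reset to `'_'`.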

DEMO

This demo shows the part of a run where the energy level of the actors changes from 200 to 0, which means that they have reached the critical state. (In this run this happens to all actors at the same time, which is usually not the case.)

olA in Update :

    [[1, 1, 'A', [0, 200, 200, 100]], [1, 4, 'A', [1, 200, 200, 100]], [2, 4, 'A', [2, 200, 200, 100]], [4, 3, 'A', [3, 200, 200, 100]]]

iL before if

[]

iL is empty

In this situation the list of actors which have reached zero energy (iL) is still empty.

Updated actor objects:

    [1, 1, 'A', [0, 200, 200, 100]]
    [1, 4, 'A', [1, 200, 200, 100]]
    [2, 4, 'A', [2, 200, 200, 100]]
    [4, 3, 'A', [3, 200, 200, 100]]

    ['_', '_', '_', '_', 'F', 'O', 'F']
    ['O', 'A', '_', 'F', 'A', 'O', 'F']
    ['F', 'O', 'O', 'F', 'A', '_', 'O']
    ['_', 'F', '_', 'F', 'O', 'F', 'F']
    ['O', '_', 'O', 'A', 'O', 'O', '_']
    ['F', '_', 'F', '_', 'O', '_', 'O']
    ['O', '_', '_', '_', '_', 'O', '_']

Updated food objects:

    [0, 4, 'F', [0, 1000, 20]]
    [0, 6, 'F', [1, 1000, 20]]
    [1, 3, 'F', [2, 1000, 20]]
    [1, 6, 'F', [3, 1000, 20]]
    [2, 0, 'F', [4, 1000, 20]]
    [2, 3, 'F', [5, 1000, 20]]
    [3, 1, 'F', [6, 1000, 20]]
    [3, 3, 'F', [7, 1000, 20]]
    [3, 5, 'F', [8, 1000, 20]]
    [3, 6, 'F', [9, 1000, 20]]
    [5, 0, 'F', [10, 1000, 20]]
    [5, 2, 'F', [11, 1000, 20]]

olA in Update :

    [[1, 1, 'A', [0, 0, 200, 100]], [1, 4, 'A', [1, 0, 200, 100]], [2, 4, 'A', [2, 0, 200, 100]], [4, 3, 'A', [3, 0, 200, 100]]]

Now the energy levels have reached 0.

iL before if

[0, 1, 2, 3]

The list iL is now filled with the indices of those actors in olA which have reached energy 0.

IL inside if: [0, 1, 2, 3]

yxL inside if: [[1], [1], [1], [4], [2], [4], [4], [3]]

xyc inside :

[[1, 1], [1, 4], [2, 4], [4, 3]]

This last version of the yxc list shows the (y,x) coordinates of those actors which have energy below 1.

iL before pop :

[0, 1, 2, 3]

Then the pop() operation starts, which takes the identified actors out of the olA list. The removal starts from the right side of the list; otherwise the list would shrink during the removal, and indices which were valid in the full list would point to the wrong element or no longer exist.

pop : 3

pop : 2

pop : 1

pop : 0
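Why the order of the pop() calls matters can be checked with a small experiment. This is only an illustration on a toy list; the helper `pop_indices` and the names in the list are hypothetical and not part of vw3.py.

```python
def pop_indices(items, indices, reverse=True):
    """Pop the given indices from a copy of `items`; right-to-left by default."""
    result = list(items)
    for i in sorted(indices, reverse=reverse):
        result.pop(i)
    return result


names = ['a0', 'a1', 'a2', 'a3', 'a4']

# Right-to-left: the elements at the original indices 1 and 3 are removed
print(pop_indices(names, [1, 3]))

# Left-to-right: after pop(1) the list has shifted, so pop(3) hits 'a4' instead of 'a3'
print(pop_indices(names, [1, 3], reverse=False))

# Left-to-right with all indices of a four-element list runs out of range
try:
    pop_indices(names[:4], [0, 1, 2, 3], reverse=False)
except IndexError as err:
    print('IndexError:', err)
```

The first call leaves `['a0', 'a2', 'a4']`, the second leaves `['a0', 'a2', 'a3']`, and the third raises an IndexError at the third pop, which is exactly the situation the reverse loop avoids.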

Updated actor objects:

As one can see, the actors have been removed from the grid.

    ['_', '_', '_', '_', 'F', 'O', 'F']
    ['O', '_', '_', 'F', '_', 'O', 'F']
    ['F', 'O', 'O', 'F', '_', '_', 'O']
    ['_', 'F', '_', 'F', 'O', 'F', 'F']
    ['O', '_', 'O', '_', 'O', 'O', '_']
    ['F', '_', 'F', '_', 'O', '_', 'O']
    ['O', '_', '_', '_', '_', 'O', '_']

Updated food objects:

    [0, 4, 'F', [0, 1000, 20]]
    [0, 6, 'F', [1, 1000, 20]]
    [1, 3, 'F', [2, 1000, 20]]
    [1, 6, 'F', [3, 1000, 20]]
    [2, 0, 'F', [4, 1000, 20]]
    [2, 3, 'F', [5, 1000, 20]]
    [3, 1, 'F', [6, 1000, 20]]
    [3, 3, 'F', [7, 1000, 20]]
    [3, 5, 'F', [8, 1000, 20]]
    [3, 6, 'F', [9, 1000, 20]]
    [5, 0, 'F', [10, 1000, 20]]
    [5, 2, 'F', [11, 1000, 20]]

!!! MAIN: no more actors in the grid !!!

    ['_', '_', '_', '_', 'F', 'O', 'F']
    ['O', '_', '_', 'F', '_', 'O', 'F']
    ['F', 'O', 'O', 'F', '_', '_', 'O']
    ['_', 'F', '_', 'F', 'O', 'F', 'F']
    ['O', '_', 'O', '_', 'O', 'O', '_']
    ['F', '_', 'F', '_', 'O', '_', 'O']
    ['O', '_', '_', '_', '_', 'O', '_']

CYCle : 6 ————————-

MAIN LOOP: STOP = 'N', CONTINUE != 'N'