eJournal: uffmm.org,

ISSN 2567-6458, 20.April 2019

Email: info@uffmm.org

Author: Gerd Doeben-Henisch

Email: gerd@doeben-henisch.de

A first draft version …

CONTEXT

The context for this text is the whole block dedicated to the AAI (Actor-Actor Interaction) paradigm. The aim of this text is to give the big picture of all dimensions and components of this subject as it appears in April 2019.

The first dimension introduced is the historical dimension, because it gives a first orientation in the course of events that led to the current situation. It starts with the early days of real computers in the 1930s and 1940s.

The second dimension is the engineering dimension. It describes the particular perspective from which we look at the overall topic of interactions between human persons and computers (or machines, technology, or society). We are interested in how to transform a given problem into a valuable solution in a methodologically sound way, which is called engineering.

The third dimension is society as a whole, because engineering always happens as a process within a society. Society provides the resources that can be used, and it supplies the preferences (values) that define what is understood as ‘valuable’, as ‘good’.

The fourth dimension is Philosophy, understood as the kind of thinking that takes into account everything that can be thought. Within thinking, Philosophy clarifies the conditions of thinking and the possible tools of thinking, and it has to clarify when a symbolic expression becomes true.

HISTORY

In the historical dimension we look back on the course of events. In a first step, this retrospective is guided by the concepts of HCI (Human-Computer Interface) and HMI (Human-Machine Interaction).

It is an interesting phenomenon how the original focus on the interface between human persons and the early computers shifted to the more general picture of interaction. The reason is that the computer as a machine evolved quickly, driven by the rapid development of the enabling hardware (HW) and the enabling software (SW).

Within the general framework of hardware and software, so-called artificial intelligence (AI) first developed as a sub-topic of its own. Over the last 10 to 20 years it has become so productive that it now seems to be turning into a normal part of every kind of software; software and smart software seem to be interchangeable. Thus the new terms augmented intelligence and collective intelligence are emerging, intended to bridge the possible gap between humans with their human intelligence and machine intelligence. There is some motivation on the part of society to avoid the impression that smart (intelligent) machines will some day replace humans. Instead, one propagates the vision of a new collective form of intelligence in which human and machine intelligence enter a symbiosis where each side gives its best and receives a maximum in a win-win situation.

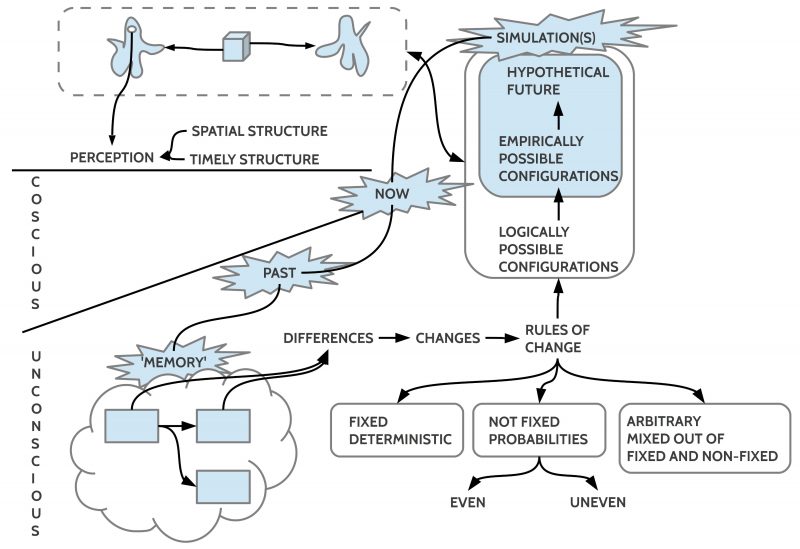

What is revealing about the current situation is the fact that the mainstream always talks about intelligence but not seriously about learning! Intelligence is at its root a static concept, representing some capabilities at a certain point in time, while learning is the more general dynamic concept that a system can change its behavior depending on current external stimuli as well as on internal states. Such a change includes real changes of some of its internal states. The concept of intelligence does not convey this dynamics. The most demanding aspect of learning is the need for preferences: without preferences, learning is impossible. Today machine learning is a very weak example of learning, because the question of preferences is not a real topic there. One assumes that some reward is available, but one does not really investigate where it comes from. The rare research that tries to do this job states that nobody has the faintest idea how general continuous learning could happen. Human society is of no help with this problem, since human societies experience a clash of many, often opposing, values, and they have no commonly accepted view of how to improve this situation.
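To make the claim "without preferences learning is impossible" a bit more concrete, here is a minimal Python sketch under explicit assumptions. The internal state is assumed to be a table of value estimates, one per possible action, and the preference is assumed to be a hypothetical function `preference(action)` that returns a number. The names `PreferenceLearner` and `train` are illustrative only and are not part of the AAI model discussed in this text. The sketch shows one narrow point: the same update rule changes the internal state when a preference is given, and changes nothing when it is missing.

```python
from typing import Callable, Dict, List, Optional


class PreferenceLearner:
    """Toy learner (hypothetical illustration, not the author's model).

    Internal state: one value estimate per action.
    Learning: move the estimate toward the value assigned by a preference.
    """

    def __init__(
        self,
        actions: List[str],
        preference: Optional[Callable[[str], float]] = None,
        rate: float = 0.5,
    ) -> None:
        self.values: Dict[str, float] = {a: 0.0 for a in actions}
        self.preference = preference  # assumed external source of "what is good"
        self.rate = rate              # step size of each internal change

    def act(self) -> str:
        # Behavior depends on internal state: pick the highest-valued action
        # (on ties, max() returns the first action in insertion order).
        return max(self.values, key=self.values.get)

    def learn(self, action: str) -> None:
        if self.preference is None:
            # No preference: there is nothing to compare the outcome against,
            # so the internal state cannot change in a directed way.
            return
        reward = self.preference(action)
        self.values[action] += self.rate * (reward - self.values[action])


def train(learner: PreferenceLearner, steps: int) -> None:
    # Try every action in turn (simple systematic exploration).
    actions = list(learner.values)
    for i in range(steps):
        learner.learn(actions[i % len(actions)])


if __name__ == "__main__":
    prefers_b = PreferenceLearner(["a", "b"], preference=lambda a: 1.0 if a == "b" else 0.0)
    train(prefers_b, 4)
    print(prefers_b.act(), prefers_b.values)

    no_preference = PreferenceLearner(["a", "b"])
    train(no_preference, 4)
    print(no_preference.act(), no_preference.values)
```

With a preference, the learner's state drifts toward the preferred action and its behavior changes. Without one, the state stays where it started. Where such a preference should come from is exactly the open question raised above, and the sketch does not answer it.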

ENGINEERING

Engineering is the art and the science of transforming a given problem into a valuable and working solution. What counts as valuable is decided by the surrounding, enabling society, and this judgment can change over time. Whether a solution is judged to be working can also change over time, but the criteria used for this judgment are more stable because they are tied to concrete capabilities of technical solutions.

While engineering was, and always is, a kind of art that needs aspects like creativity, innovation, and intuition, it is also, as far as possible, a procedure driven by defined methods of how to do things, and these methods are, as far as possible, backed up by scientific theories. The real engineer therefore synthesizes art, technology, and science in a unique way that cannot be learned completely in school.

In the past as well as in the present, engineering has to happen in teams of many people, often many thousands or even more, who coordinate their brains by communication. This communication enables some kind of understanding in the individual brains, emerging world pictures, which in turn guide the perception, the decisions, and the concrete behavior of everybody. In every individual team member these cognitive processes are embedded in mixtures of desires, emotions, and motivations, which can either support or obstruct them. Therefore an optimal result can only be reached if the communication serves all necessary cognitive processes and the interactions between the team members enable the necessary constructive desires, emotions, and motivations.

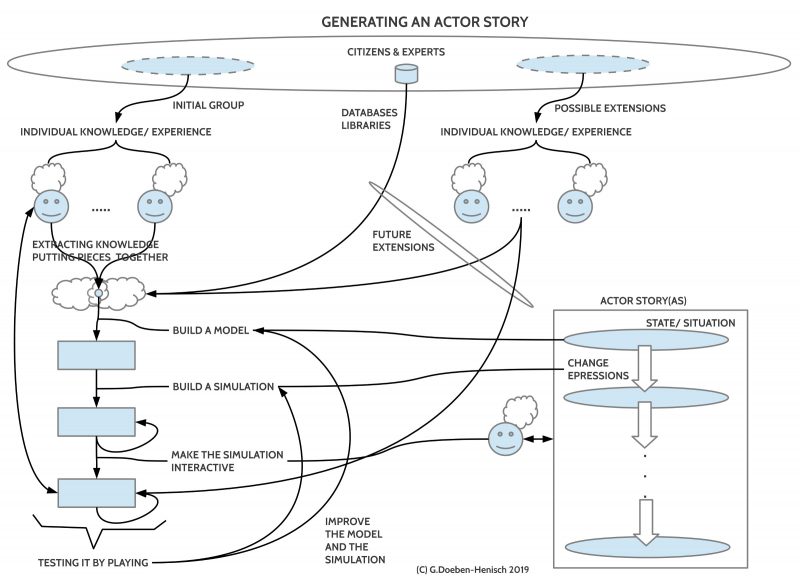

If an engineering process is carried out by a small group of dedicated experts, usually triggered by the given problem of an individual stakeholder, this can work well in many situations. It has the flavor of a so-called top-down approach. If the engineering deals with states of affairs in which different kinds of people, for example the citizens of a town, are affected by the results of such a process, the restriction to a small group of experts can become highly counterproductive. In such cases of widespread interest it seems promising to include representatives of all the affected persons in the executing team, so that their experiences and their kinds of preferences are recognized. This has to be done in a way that is understandable and appreciative, showing esteem for the others. This manner of extending the team of usual experts by situational experts can be termed a bottom-up approach. In this usage, bottom-up is not the opposite of top-down; it reflects the extent to which members of a society are included insofar as they are affected by the results of a process.

SOCIETY

Societies, past and present, show a great variety of value systems, organizational structures, systems of power, etc. Engineering processes within a society depend completely on the available resources of that society and on its value systems.

The population dynamics, the needs and wishes of the people, the real territories, the climate, housing, traffic, and many other things constantly produce demands that must be met if life is to remain possible and to continue over time.

The self-understanding and the self-management of societies are crucial for their ability to use engineering to improve life. This requires communication and education to a sufficient extent as well as appropriate public rules of management; otherwise the understanding and the freedom to act that are needed to use engineering in the right way are lacking.

PHILOSOPHY

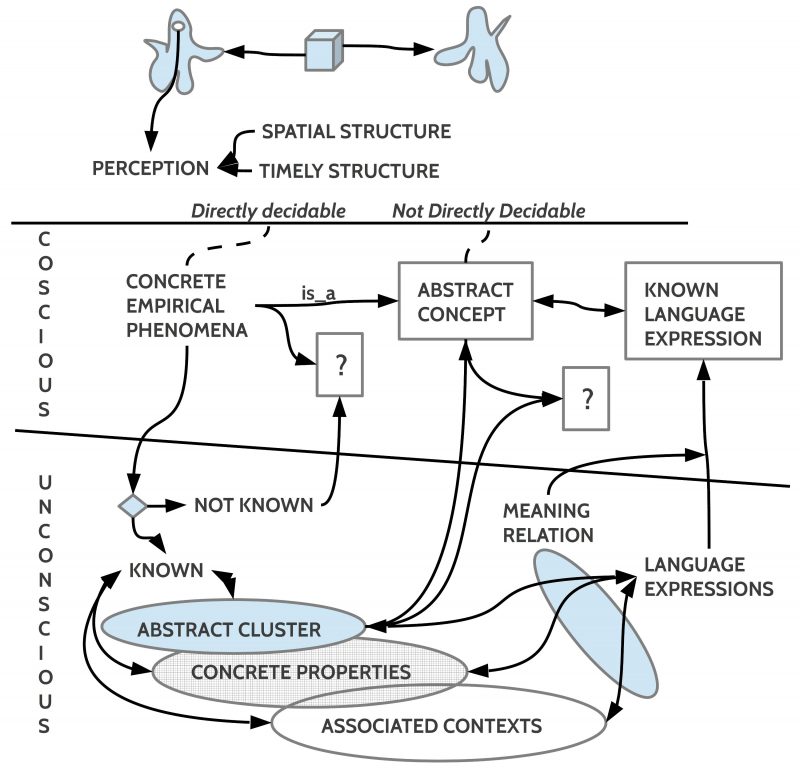

Without communication no common constructive process can happen. Communication follows many implicit rules, compressed in the formula: who can speak when, how, about what, with whom, etc. Communication enables cognitive processes such as understanding, explanation, and lines of argument. Especially important for survival is the ability to make true descriptions and the ability to decide whether a statement is true or not. Without this basic ability communication will break down, coordination will break down, life will break down.

The basic discipline that clarifies the rules and conditions of true communication, and of cognition in general, is called Philosophy. All the more modern empirical disciplines are specializations of the general scope of Philosophy, and it is Philosophy that integrates all the special disciplines into one coherent framework (this is the ideal; in reality we are far from it).

Describing the process of engineering, driven by different kinds of actors who coordinate themselves by communication, is therefore primarily the task of Philosophy with all its sub-disciplines.

Accordingly, some of the topics of Philosophy are language, text, theory, verification of a theory, functions within theories such as algorithms, computation in general, inference of true statements from given theories, and the like.

In this text I apply Philosophy as far as necessary. In particular, I introduce a new process model that extends the classical systems engineering approach by including the driving actors explicitly in the formal representation of the process. Learning machines are included as standard tools to improve human thinking and communication. This can be called Augmented Social Learning Systems (ASLS). Compared to the term Augmented Intelligence (AI), as used for instance by IBM marketing, the ASLS concept stresses that the primary point of reference is the biological systems, which created and still create machine intelligence as a new tool to enhance biological intelligence as part of biological learning systems. Compared to the term Collective Intelligence (CI), as propagated by MIT, especially by Thomas W. Malone and colleagues, the spirit of the CI concept seems to be similar, but perhaps only weakly so.