eJournal: uffmm.org,

ISSN 2567-6458, 09.Oct 2017 – April 9, 2022, 13:30 h

Email: info@uffmm.org

Author: Gerd Doeben-Henisch

Email: gerd@doeben-henisch.de

Remark April 2022

This post from Oct 2017 will be reviewed in the new conceptual framework of an Applied Empirical Theory [AET] with an additional Dynamic Format [DF]. For more details see HERE.

OVERVIEW

A short story telling you (i) how we interface the intelligent machines (IM) part with the actor-actor interaction (AAI) part, (ii) what a first working definition of intelligent machines (IM) looks like in this text, and (iii) how intelligence is defined and how one can measure it.

IM WITHIN AAI

In this blog we see IM not in isolation, as a stand-alone endeavor, but as embedded in a discipline called actor-actor interaction (AAI) (later called DAAI := Distributed Actor Actor Interaction). AAI investigates complex tasks and looks at how different kinds of actors interact with technical systems in these contexts. Where the participating systems are technical systems, one speaks of a system interface (SI) as that part of a technical system which interacts with the human actor. In the case of biological systems (mostly humans, but it could be animals as well), one speaks of the user interface (UI). In this text we generalize both cases by the general concept of an actor (biological or non-biological) which has some actor interface (ActI); this actor interface embraces all properties which are relevant for the interactions of the actor.

For the analysis of the behavior of actors in such task-environments one can distinguish two important concepts: the actor story (AS) describing the context as an observable process, as well as different actor models (AM). Actor models are special extensions of an actor story because an actor model describes the observable behavior of actors as a behavior function (BF) with a set of assumptions about possible internal states of the actors. The assumptions about possible internal states (IS) are either completely arbitrary or empirically motivated.

The embedding of IM within AAI can be realized through the concepts of an actor model (AM) and the actor story (AS). Whatever is important for something which is called an intelligent machine application (IMA) can be defined as an actor model within an actor story. This embedding of IM within AAI offers many advantages.

This has to be explained with some more details.

An Intelligent Machine (IM) in an Actor Story

Let us assume that there exists a mathematical-graph representation of an actor story written as AS_{L_{ε}}. Such a graph has nodes which represent situations. Formally these are sets of properties, possibly refined into subsets which represent the different kinds of actors embedded in this situation as well as the different kinds of non-actors.

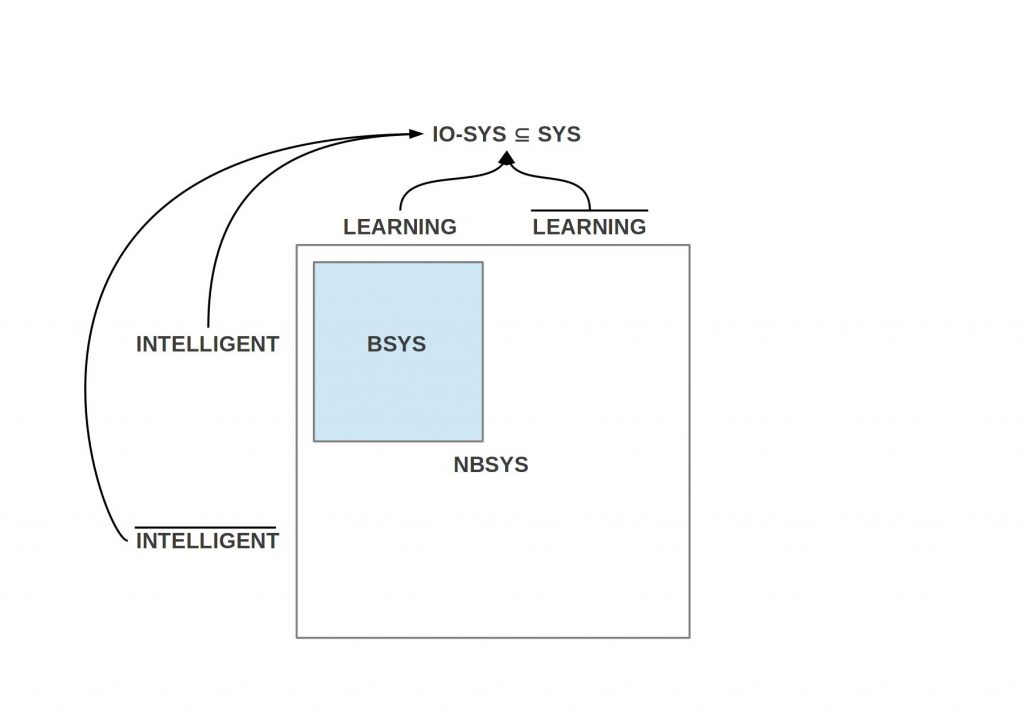

Actors can be classified (as introduced above) as either biological actors (BA) or non-biological actors (NBA). Both kinds of actors can, in another reading, be subsumed under the general term of input-output systems (IO-SYS). An input-output system can be a learning system or a non-learning system. Another basic property is that of being intelligent or non-intelligent. Being a learning system and being an intelligent system are usually strongly connected, but this need not be so. Being a learning system can be associated with being non-intelligent, and being intelligent can be connected with being non-learning (cf. Figure 1).

While biological systems are always learning and intelligent, one can find non-biological systems of all types: non-learning and non-intelligent, non-intelligent and learning, non-learning and intelligent, and learning and intelligent.

Learning System

To classify a system as a learning system requires that the system has the general ability to change its behavior over time, such that there exists a time-span (t1,t2) after which the behavior in response to certain critical stimuli has changed compared to the time before. [1] From this requirement it follows that a learning system is an input-output system with at least one internal state which can change. Thus we have the general assumption:

Def: Learning System (LS)

- LS(x) iff

- x = <I, O, IS, φ>

- φ : I × IS → IS × O

- I := Input

- O := Output

- IS := Internal states

Some x is a learning system (LS) if it is a structure containing sets for input (I), output (O), and internal states (IS). These sets are operated on by a behavior function φ which maps inputs and current internal states to outputs as well as back to internal states. The set of possible learning functions is infinite.
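The definition above can be made concrete with a minimal Python sketch. The class name `IOSystem`, the function `novelty_phi`, and the stimulus "bell" are hypothetical and chosen only for illustration; the sketch merely shows one of the infinitely many possible functions φ : I × IS → IS × O, in which the response to the same stimulus changes because the internal state has changed.

```python
from dataclasses import dataclass
from typing import Callable, Hashable, Tuple


@dataclass
class IOSystem:
    """x = <I, O, IS, phi> with phi : I x IS -> IS x O (illustrative only)."""
    phi: Callable[[Hashable, Hashable], Tuple[Hashable, Hashable]]
    state: Hashable  # the current internal state, an element of IS

    def step(self, stimulus):
        # phi maps (input, current state) to (next state, output)
        self.state, output = self.phi(stimulus, self.state)
        return output


def novelty_phi(stimulus, seen):
    """Hypothetical behavior function: remembers every stimulus it has met."""
    if stimulus in seen:
        return seen, "known"
    return seen | {stimulus}, "novel"


system = IOSystem(phi=novelty_phi, state=frozenset())
first = system.step("bell")   # internal state changes here
second = system.step("bell")  # same stimulus, different response
print(first, second)
```

The second response differs from the first although the stimulus is identical, which is the behavioral change over a time-span (t1,t2) that the definition requires.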

Intelligent System

The terms ‘intelligent’ and ‘intelligence’ have not been standardized so far. This means that everybody uses them somewhat arbitrarily.

In this text we take the basic idea of a scientific usage of the term ‘intelligence’ from experimental psychology, which has developed clearly defined operational concepts since the end of the 19th century; these have proved quite stable in their empirical applications. [2a,b,c]

The central idea of the psychological concept is to associate the usage of the term ‘intelligence’ with the observable behavior of those actors which are to be classified according to defined methods of measurement.

In the case of experimental psychology the actors have been biological systems, mainly humans, and in the first years of the research, school children of certain ages. Because nobody knew what ‘intelligence’ means ‘as such’, one agreed to accept the observable behavior of children in certain task environments as ‘manifestations’ of a ‘presupposed unknown intelligence’. Thus the ability of children to solve defined tasks in a certain defined manner became a norm for what is called ‘intelligence’. Solving the tasks in a certain time with less than a certain number of errors was used as a ‘baseline’, and all behavior deviating from the baseline was rated as ‘better’ or ‘poorer’.

Thus the ‘content’ of the ‘meaning’ of the term ‘intelligence’ has been delegated to historical patterns of behavior which were common in a certain time-span in a certain geographical and cultural region.

While these behavior patterns can change during the course of time the general method of measurement is invariant.

In the time since then experimental psychology has modified and elaborated this first concept in some directions.

One direction is the modification of the kinds of tasks which are used for the tests. With regard to the cultural context one has modified the content, trying to find kinds of tasks which seem to be ‘invariant’ with regard to the presupposed intelligence factor. This is an ongoing process.

The other direction is the focus on the actors as such. Because biological systems like humans change the development of their intelligence with age one has tried to find out ‘typical tasks for every age’. This too is an ongoing process.

This history of experimental psychology gives very interesting examples of how one can approach the problem of the usage and the measurement of some X which we call ‘intelligence’.

In the context of an AAI-approach we have not only biological systems, but also non-biological systems. Thus most of the elaborated parameters of psychology for human actors are not general enough.

One possible strategy to generalize the intelligence paradigm of experimental psychology could be to ‘free’ the selection of task sets from the narrow human cultures of the past and require only ‘clearly defined task sets with defined interfaces and defined contexts’. All these task sets can be arranged either in one super-set or in a parameterized field of sets. The sum of all these sets then defines a space of possible behavior and, associated with this, a space of possible measurable intelligence.

A task has then to be given as an actor story according to the AAI-paradigm. Such a specified actor story allows the formal definition of a complexity measure which can be used to measure the ‘amount of intelligence necessary to solve such a task’.

With such a more general and extendable approach to the measurement of observable intelligence one can compare all kinds of systems with each other. With such an approach one can further show objectively where biological and non-biological systems differ generally, where they are similar, and to what extent they differ with regard to concrete circumstances.

Measuring Intelligence by Actor Stories

Presupposing actor stories (AS) (ideally formalized as mathematical graphs) one can define a first general operational measurement of intelligence.

Def: Task-Intelligence of a task τ (TInt(τ))

- Every defined task τ represents a graph g with one shortest path p_{min}(τ) = π_{min} from a start node to a goal node.

- Every such shortest path π_{min} has a certain number of nodes, path-nodes(π_{min}) = ν.

- The number of solved nodes (ν_{solved}) is related to the total number of nodes ν as the ratio ν_{solved}/ν. We take TInt(τ) = ν_{solved}/ν. It follows that TInt(τ) lies between 0 and 1: 0 ≤ TInt(τ) ≤ 1.

- To every task a maximal duration Δ_{max} is attached; all nodes which are solved within this maximal duration Δ_{max} are declared ‘solved’, all others ‘unsolved’.
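The definition of TInt(τ) can be sketched in Python as follows. Several points are assumptions not fixed by the text: the task graph is given as an adjacency dictionary, the shortest path is found by breadth-first search (all edges counting equally), the start node is counted among the ν path nodes, and `solve_times` (a hypothetical name) records the elapsed time at which an actor solved each node. The example graph and times are invented for illustration.

```python
from collections import deque


def shortest_path(graph, start, goal):
    """Breadth-first search; returns one shortest node list or None."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in graph.get(node, ()):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


def task_intelligence(graph, start, goal, solve_times, delta_max):
    """TInt = nu_solved / nu over the nodes of the shortest path."""
    path = shortest_path(graph, start, goal)
    if not path:
        raise ValueError("task has no path from start to goal")
    # A node counts as solved only if it was solved within delta_max.
    solved = sum(
        1 for node in path
        if solve_times.get(node, float("inf")) <= delta_max
    )
    return solved / len(path)


# Hypothetical task: shortest path s0 -> s1 -> s2 -> s3 (nu = 4).
graph = {"s0": ["s1", "sx"], "sx": ["s1"], "s1": ["s2"], "s2": ["s3"], "s3": []}
solve_times = {"s0": 0, "s1": 4, "s2": 9, "s3": 15}  # s3 solved too late
tint = task_intelligence(graph, "s0", "s3", solve_times, delta_max=10)
print(tint)  # 0.75
```

Three of the four path nodes are solved within Δ_{max} = 10, so the sketch yields TInt(τ) = 3/4.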

The usual case will require more than one task to be realized. Thus we introduce the concept of a task field (TF).

Def: Task-Field of type x (TF_{x})

Def: Task-Field Intelligence (TFInt)

A task-field TF of type x includes a finite set of individual tasks, TF_{x} = { τ_{x.1}, τ_{x.2}, … , τ_{x.n} } with n ≥ 2. The sum of all individual task intelligence values TInt(τ_{x.i}) has to be normalized to 1 by dividing it by the number of tasks, i.e. TFInt(TF_{x}) = (TInt(τ_{x.1}) + TInt(τ_{x.2}) + … + TInt(τ_{x.n})) / n (with 0 in the denominator not allowed). Thus the value of the intelligence of a task field of type x, TFInt(TF_{x}), is again in the interval [0,1].

Because the different tasks in a task field TF can be of different difficulty it should be possible to introduce some weighting for the individual task intelligence values. This should not change the general mechanism.
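A minimal sketch of the task-field intelligence, including one possible weighting, could look as follows. The text does not specify a weighting scheme; the weighted mean with non-negative weights used here is an assumption, chosen because it keeps the result in [0,1] and reduces to the plain average when all weights are equal. The function name and the sample values are hypothetical.

```python
def task_field_intelligence(tint_values, weights=None):
    """TFInt as the (optionally weighted) mean of the TInt values."""
    n = len(tint_values)
    if n < 2:
        raise ValueError("a task field needs at least two tasks (n >= 2)")
    if any(not 0.0 <= v <= 1.0 for v in tint_values):
        raise ValueError("every TInt value must lie in [0, 1]")
    if weights is None:
        weights = [1.0] * n  # unweighted case: plain average
    if len(weights) != n or any(w < 0 for w in weights):
        raise ValueError("need one non-negative weight per task")
    total = sum(weights)
    if total == 0:
        raise ValueError("weights must not all be zero")
    return sum(w * v for w, v in zip(weights, tint_values)) / total


values = [0.75, 0.5, 1.0]  # hypothetical TInt values of three tasks
plain = task_field_intelligence(values)
weighted = task_field_intelligence(values, weights=[1, 2, 1])
print(plain, weighted)  # 0.75 0.6875
```

Giving the second task double weight lowers the result from 0.75 to 0.6875, while the value stays within [0,1] as required.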

Def: Combined Task-Fields (TF)

In view of the huge variety of possible task fields in this world it can make sense to introduce more general layers by grouping task fields of different types together into larger combined fields, like TF_{x,…,z} = TF_{x} ∪ TF_{y} ∪ … ∪ TF_{z}. The task field intelligence TFInt of such combined task fields would be computed as before.
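The combination of task fields can be sketched as a union of task sets followed by the same averaging as for a single field. In this sketch a task field is represented, as an assumption, by a dictionary from hypothetical task identifiers to already measured TInt values; the function names and numbers are illustrative only.

```python
from statistics import mean


def combine_task_fields(*fields):
    """Union of task fields; each field maps task id -> TInt value."""
    combined = {}
    for field in fields:
        for task_id, tint in field.items():
            if task_id in combined and combined[task_id] != tint:
                raise ValueError(f"conflicting values for task {task_id}")
            combined[task_id] = tint
    return combined


def tfint(field):
    """Unweighted task-field intelligence: mean of the TInt values."""
    if len(field) < 2:
        raise ValueError("a task field needs at least two tasks")
    return mean(list(field.values()))


tf_x = {"x.1": 0.75, "x.2": 0.75}            # TFInt = 0.75
tf_y = {"y.1": 1.0, "y.2": 0.5, "y.3": 0.0}  # TFInt = 0.5
tf_xy = combine_task_fields(tf_x, tf_y)
print(tfint(tf_xy))  # 0.6
```

Note that the value of the combined field (0.6) is computed over all five tasks and is therefore not simply the average of the two field values (0.625); larger fields contribute more tasks to the union.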

Def: Omega Task-Field at time t (TF_{ω}(t))

The most comprehensive assembly of such combinations shall here be called the Omega-Task-Field at time t TF_{ω}(t). This indicates the known maximum of intelligence measurements at that point of time.

Measurement Comments

With these assumptions the term intelligence is restricted to clearly defined domains: an individual task, a task-field of type x, some grouped task-fields, or the current omega task-field. In every such domain the intelligence value lies in the interval [0,1], or, written as a percentage, between 0% and 100%.

Independent of the type of an actor — biological or not — one can measure the intelligence of such an actor with the same domains of defined tasks. As a result one can easily compare all known actors with regard to such defined task domains.

Because the acting actors can differ considerably in their input-output capabilities, it follows that every actor has to organize some interface which enables the actor to use the defined task. There are no special restrictions on the format of such an interface, but there is one requirement which has to be observed strictly: the interface as such is not allowed to do any kind of computation beyond providing the necessary input from the task domain or providing the necessary output to the domain. Only then are the different tests able to reveal differences between the different actors.

If the tests show differences between certain types of actors with regard to a certain task or a task-field then this is a chance to develop smart assistive interfaces which can help the actor in question to overcome his weakness compared to the other type of actor. Thus this kind of measuring intelligence can be a strong supporter for a better world in the future.

Another consequence of the differing intelligence values can be to look at the inner structure of an actor with weaker values and to ask how one could improve this actor's capabilities. This can be done e.g. by different kinds of training or by improving the actor's system structures.

COMMENTS

[1] Sara J. Shettleworth, Biological Approaches to the Study of Learning, pp. 185–219, in: N. J. Mackintosh (Ed.), Animal Learning and Cognition, Academic Press, San Diego, New York, London et al., 1994

[2a] Ernest R. Hilgard, Rita L. Atkinson, Richard C. Atkinson, Introduction to Psychology, Harcourt Brace Jovanovich, Inc., Psychology, 7th ed., New York, San Diego, Chicago et al., 1979

[2b] Detlef H. Rost, Intelligenz. Fakten und Mythen, Beltz Verlag, Weinheim – Basel, 2009

[2c] Detlef H. Rost, Handbuch Intelligenz, Beltz Verlag, Weinheim – Basel, 2013