eJournal: uffmm.org

ISSN 2567-6458, 22.March – 23.March 2021

Email: info@uffmm.org

Author: Gerd Doeben-Henisch

Email: gerd@doeben-henisch.de

CONTEXT

This text is part of a philosophy of science analysis of the case of the oksimo software (oksimo.com). A specification of the oksimo software from an engineering point of view can be found in four consecutive posts dedicated to the HMI-Analysis for this software.

THE OKSIMO EVENT SPACE

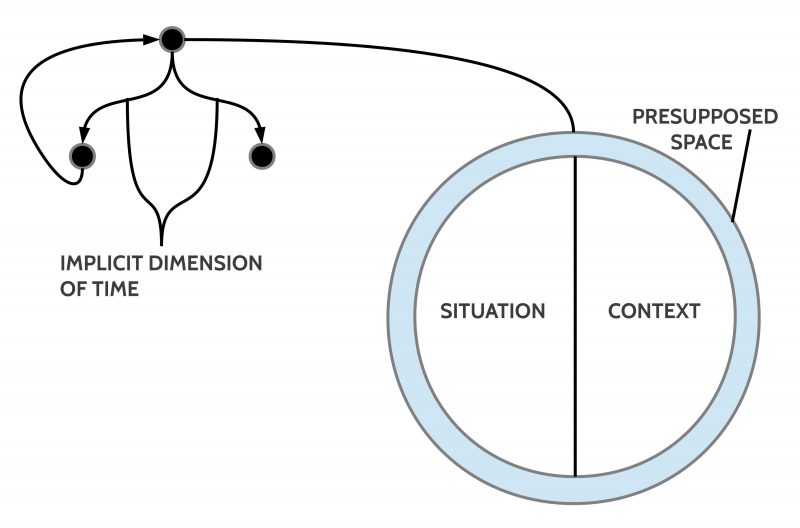

The characterization of the oksimo software paradigm starts with an informal characterization of the oksimo software event space.

EVENT SPACE

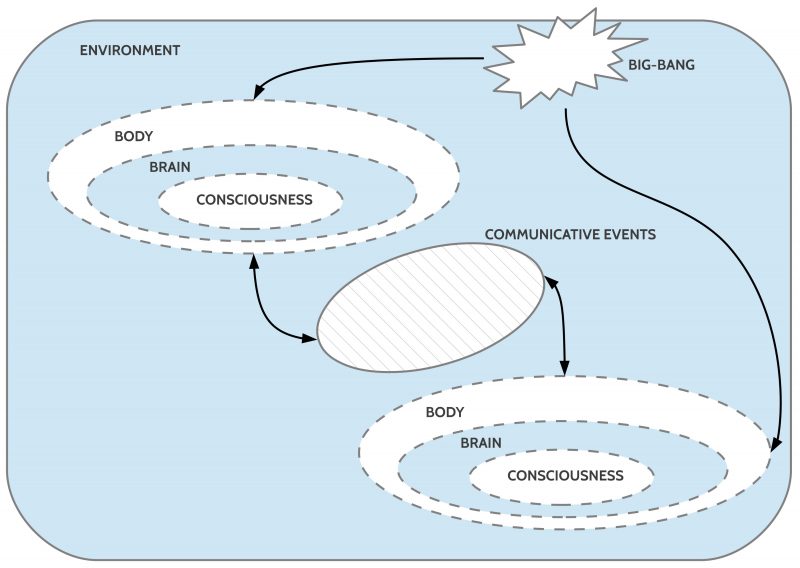

An event space is a space that can be filled by observable events which fit the species-specific, internally processed representations of the environment [1], [2], here called internal environments [ENVint]. Thus the same external environment [ENV] can be represented in 10 different internal formats if 10 different species are present. The expression ‘environment’ [ENV] is therefore an abstract concept: it assumes an objective reality which is common to all living species, but this reality is processed by every species in a species-specific way.

In a human culture the usual point of view [ENVhum] coexists with the points of view [ENVa] of all the other species a.

In the ideal case it would be possible to translate all species-specific views ENVa into a symbolic representation, which in turn could be translated into the human point of view ENVhum. Then, in the ideal case, we could define the term environment [ENV] as the sum of all the different species-specific views translated into a human-specific language: ∑ENVa = ENV.

Because such a generalized view of the environment is, for practical reasons, still not possible today, we will start here with expressions related only to the human-specific point of view [ENVhum], using an ordinary language [L] as our language, here the English language [LEN]. Every scientific language (e.g. the language of physics) is understood here as a sublanguage of the ordinary language.

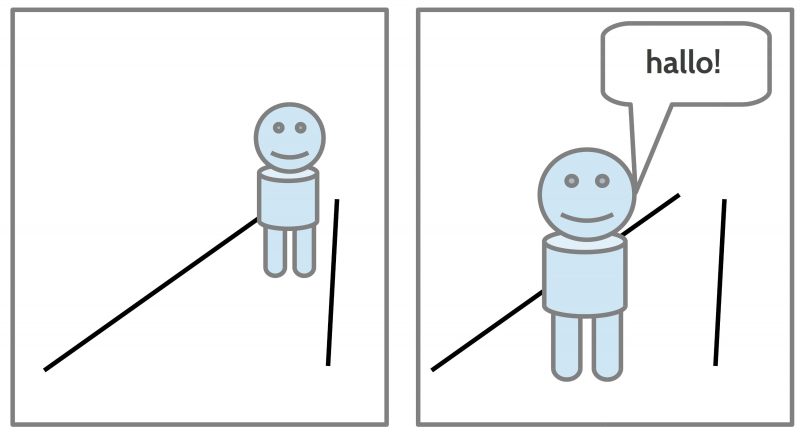

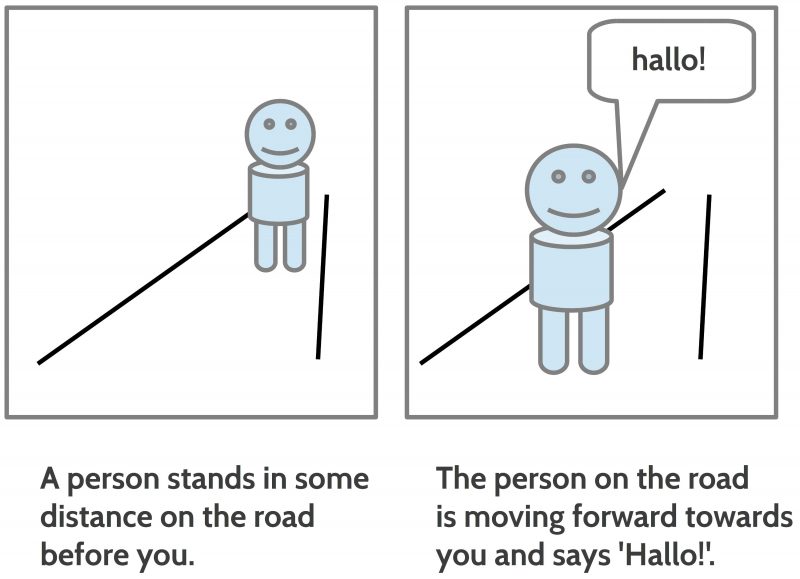

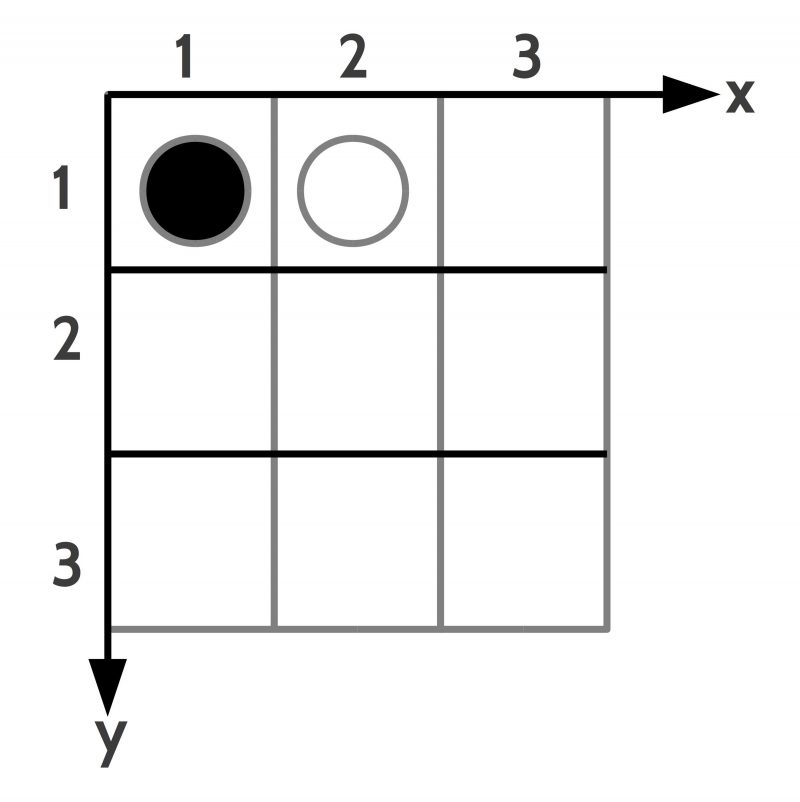

EVENTS

An event [EV] within an event space [ENVa] is a change [X] which can be observed at least by the members of that species [SP] a which is part of the environment ENV that enables the species-specific event space [ENVa]. There may be other actors from different species in the environment ENV, each with its own specific event space [ENVa]; the contents of these different event spaces can overlap with regard to certain events.

A behavior is some observable movement of the body of some actor.

Changes X can be associated with certain behavior of certain actors or with non-actor conditions.

Thus, when human or non-human actors in an environment are moving, they show a behavior which can possibly be associated with some observable changes.

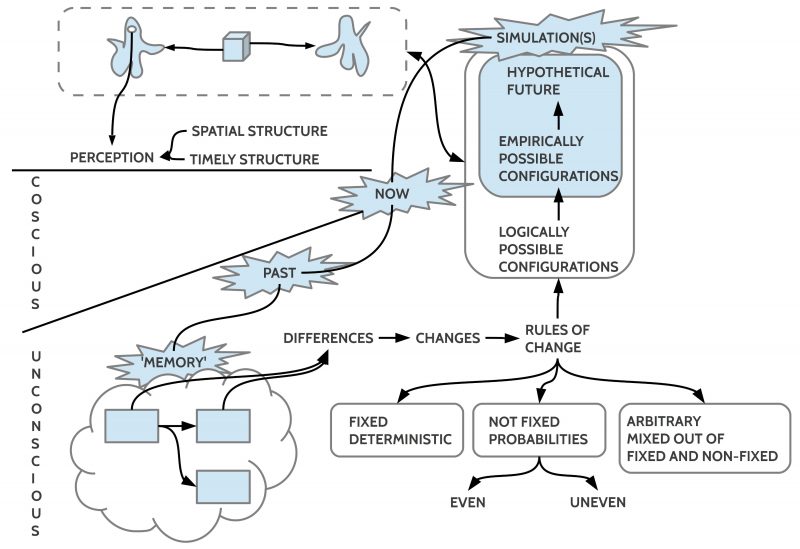

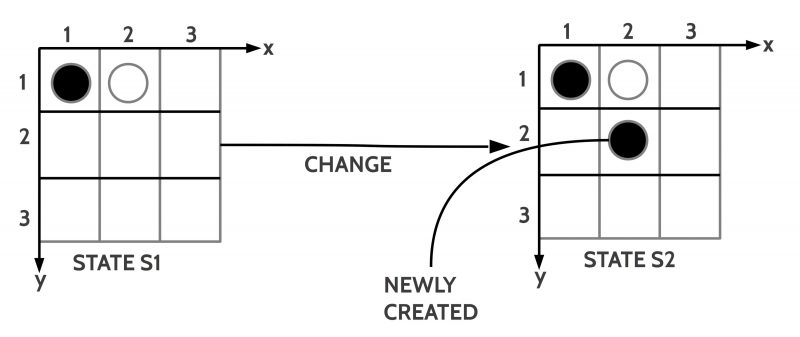

CHANGE

Besides being associated with observable events in the (species-specific) environment, the expression change is understood here as a kind of inner state in an actor which can compare past (stored) states Spast with an actual state Snow. If the past and the actual state differ in some observable aspect, Diff(Spast, Snow) ≠ 0, then there exists some change X, i.e. Diff(Spast, Snow) = X. Usually the actor perceiving a change X will assume that this internal structure represents something external to the brain, but this need not be the case. It helps if other human actors confirm such a change perception, although even this does not guarantee that a change is really occurring: in the real world a whole group of human actors can share a wrong interpretation.
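The comparison Diff(Spast, Snow) can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that an actor's state is a dictionary from observable aspects to values; the names `State` and `diff` and the example aspects are hypothetical and not part of the oksimo software.

```python
from typing import Any

# Hypothetical representation: observable aspect -> observed value.
State = dict[str, Any]


def diff(s_past: State, s_now: State) -> dict[str, tuple[Any, Any]]:
    """Return the aspects whose values differ between Spast and Snow.

    A missing aspect is treated as None. An empty result plays the role
    of Diff(Spast, Snow) = 0; a non-empty result is the change X.
    """
    aspects = s_past.keys() | s_now.keys()
    return {
        a: (s_past.get(a), s_now.get(a))
        for a in sorted(aspects)
        if s_past.get(a) != s_now.get(a)
    }


s_past = {"door": "closed", "light": "on"}  # stored past state
s_now = {"door": "open", "light": "on"}     # actual state

X = diff(s_past, s_now)
print(X)        # {'door': ('closed', 'open')}
print(bool(X))  # True, i.e. some change X exists
```

The sketch models only the internal comparison. Whether a detected change X corresponds to something external to the brain is exactly what such a comparison cannot settle.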

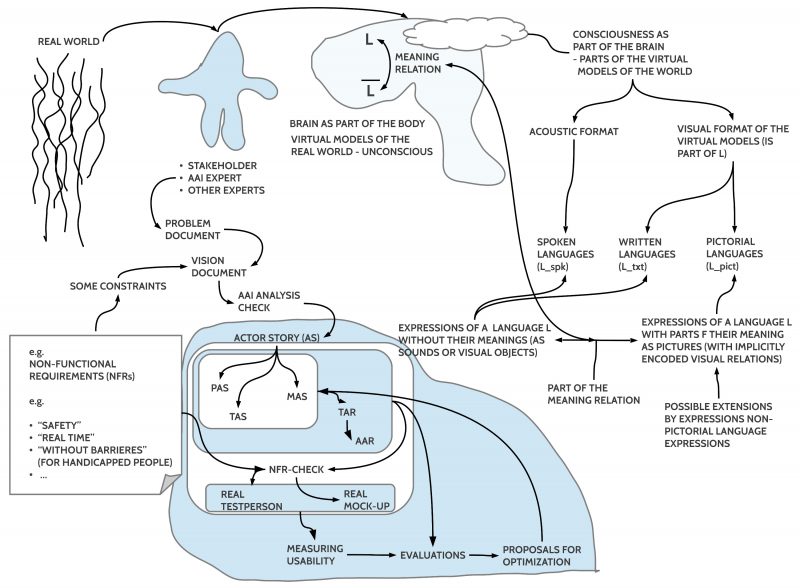

SYMBOLIC COMMUNICATION AND MEANING

It is a specialty of human actors (shared to some degree by other, non-human biological actors) that they can not only build up internal representations ENVint of the reality external to the brain (the body itself or the world beyond the body), which are mostly unconscious and only partially conscious, but can also build up structures of expressions of an internal language Lint. These can be mimicked to a high degree by expressions in the body-external environment ENV, called expressions of an ordinary language L.

For this to work one has to assume that there exists an internal mapping between the internal representations ENVint and the expressions of the internal language Lint,

meaning: ENVint ↔ Lint,

and, in addition, mappings between the internal language Lint and the ordinary language L:

speaking: Lint → L

hearing: L → Lint

Thus human actors can use their ordinary language L to activate internal encodings and decodings of the internal representations ENVint gained so far. This is called here symbolic communication.
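The three mappings can be sketched as lookup tables in Python. This is a toy model under strong assumptions: the identifiers `MEANING`, `SPEAKING`, `HEARING`, `speak` and `hear` are hypothetical, the entries are invented strings, and speaker and hearer are assumed to share one learned lexicon, whereas real meaning functions are neither directly observable nor 1-to-1.

```python
# Toy lexicon; every identifier and entry here is a hypothetical illustration.
MEANING = {  # meaning: ENVint -> Lint (one direction of ENVint <-> Lint)
    "percept:white-wooden-table": "lint:WHITE_WOODEN_TABLE",
}
MEANING_INV = {v: k for k, v in MEANING.items()}  # Lint -> ENVint

SPEAKING = {  # speaking: Lint -> L
    "lint:WHITE_WOODEN_TABLE": "There is a white wooden table.",
}
HEARING = {v: k for k, v in SPEAKING.items()}  # hearing: L -> Lint


def speak(env_int_state: str) -> str:
    """Speaker: encode an internal representation into an utterance of L."""
    return SPEAKING[MEANING[env_int_state]]


def hear(utterance: str) -> str:
    """Hearer: decode an utterance of L back into an internal representation."""
    return MEANING_INV[HEARING[utterance]]


utterance = speak("percept:white-wooden-table")
print(utterance)        # There is a white wooden table.
print(hear(utterance))  # percept:white-wooden-table
```

Inverting the dictionaries works here only because the toy entries are one-to-one; the text itself stresses that the real internal mappings are not.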

NO SPEECH ACTS

Classifying the occurrences of symbolic expressions during a symbolic communication is a nearly infinite undertaking. First impressions of the unsolvability of such a classification task can be gained by reading the Philosophical Investigations of Ludwig Wittgenstein [5]. Later attempts by different philosophers and scientists (e.g. under the heading of speech acts [3]) have not been fully convincing to this day.

Instead of assuming a complete scientific framework for classifying occurrences of symbolic expressions of an ordinary language L, we will only look at some examples and discuss these.

KINDS OF EXPRESSIONS

In what follows we will look at some selected examples of symbolic expressions and discuss these.

(Decidable) Concrete Expressions [(D)CE]

It is assumed here that two human actors A and B speaking the same ordinary language L are capable, in a concrete situation S, of describing objects OBJ and properties PROP of this situation in such a way that the hearer of a concrete expression E can decide whether the encoded meaning of the expression produced by the speaker is part of the observable situation S or not.

Thus, if A and B are together in a room with a wooden white table and there is enough light for an observation, then B can understand what A is saying if A states ‘There is a white wooden table.’

To understand means here the following. Both human actors are able to perceive the wooden white table as an object with properties. Their brains transform these external signals into internal neural signals forming an inner (not 1-to-1) representation ENVint. This representation can be mapped by the learned meaning function into expressions of the inner language Lint and then, by the speaker, into the external expressions of the learned ordinary language L. If the hearer can hear these spoken expressions, he can translate them into internal expressions, which can in turn be mapped onto his learned internal representations ENVint. In everyday situations there is a high probability that the hearer can then respond with a spoken ‘Yes, that’s true’.

If a human actor utters a symbolic expression about some observable property of the external environment and the other human actor responds with a confirmation, then such an utterance is called here a decidable symbolic expression of the ordinary language L. In this case one can classify the expression as true; otherwise it is classified as not true.

The case of being not true is not a simple one. Being not true can mean: (i) the meaning is simply not given in the actual situation; (ii) it is conceivable that the meaning could become true if the external situation were different; (iii) in the light of the accessible knowledge it is not conceivable that the meaning could become true in any situation; (iv) the meaning is too fuzzy to decide which of the cases (i)–(iii) applies.

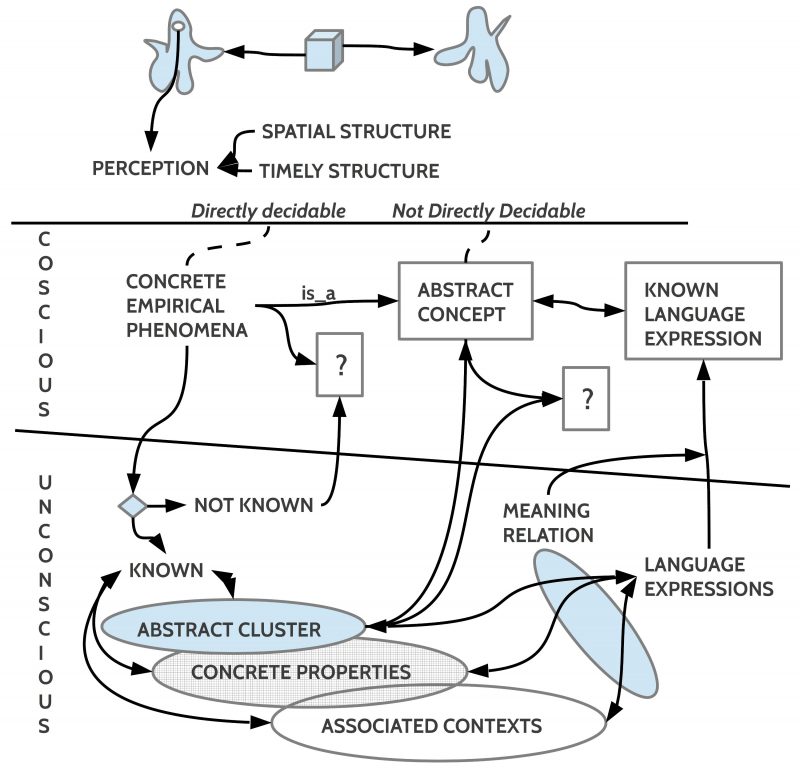

Cognitive Abstraction Processes

Before we talk about Universal (Undecidable) Expressions [U(U)E] it has to be clarified that the internal mappings in a human actor are not only non-1-to-1 mappings but also automatic transformation processes: concrete perceptions of concrete environmental matters are automatically transformed by the brain into different kinds of abstracted states, with the concrete incoming signals acting as a trigger either to start a new abstracted state or to modify an existing one. Given such abstracted states, there exists a multitude of other neural processes which process them further, embedding them in numerous different relationships.

Thus the assumed internal language Lint does not map the neural processes which process the concrete events as such, but the processed abstracted states! Language expressions as such can never be related directly to concrete material, because this concrete material has no direct neural basis. What works, completely unconsciously, is that the brain can detect that an actual neural pattern nn has some similarity with a given abstracted structure NN, whereupon this concrete pattern nn is internally classified as an instance of NN. That means we can recognize that a perceived concrete matter nn is, in ‘the light of’ our available (unconscious) knowledge, an NN, but we cannot argue explicitly why. The decision has been processed automatically (unconsciously), but we can become aware of its result.

Universal (Undecidable) Expressions [U(U)E]

Let us return to the expression ‘There is a white wooden table’, which has been used before as an example of a concrete decidable expression.

If one looks at the different parts of this expression, then the partial expressions ‘white’, ‘wooden’ and ‘table’ can be mapped by a learned meaning function φ onto abstracted structures which are the result of internal processing. This means there can be countably infinitely many concrete instances in the external environment ENV which can be understood as being white. The same holds for the expressions ‘wooden’ and ‘table’. Thus the expressions ‘white’, ‘wooden’ and ‘table’ are all related to abstracted structures and therefore have to be classified as universal expressions, which as such are, strictly speaking, not decidable, because they can be true in many concrete situations with different concrete matters. Put differently, an expression whose meaning function φ points to an abstracted structure stands in an asymmetric relation: one expression can be related to many different perceivable concrete matters, while certain members of a set of different perceived concrete matters can be related to one and the same abstracted structure on account of similarities, i.e. properties which are embedded in the perceived concrete matter and which are also part of the abstracted structure.

From a cognitive point of view one can describe this as follows: an expression like ‘table’ points to a cognitive abstracted structure T which includes a set of properties Π, and every concrete perceived structure t (caused, e.g., by some concrete matter in our environment which we would classify as a ‘table’) must have a ‘certain amount’ of properties Π* such that the properties Π* are entailed in the set of properties Π of the abstracted structure T, thus Π* ⊆ Π. Under which circumstances a speaker-hearer will say that something perceived concretely ‘is’ a table or ‘is not’ a table depends on the learning history of this speaker-hearer. A child at the beginning of learning a language L may call something a ‘chair’, and the parents will correct the child and perhaps say ‘no, this is a table’.
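The condition Π* ⊆ Π with a ‘certain amount’ of shared properties can be sketched in Python. The sketch assumes that Π* is the part of a perceived structure t's properties that also lies in Π, and it models the ‘certain amount’ as a fixed threshold `min_shared`; the class and function names, the property labels and the threshold value are all hypothetical. In a real speaker-hearer this threshold would depend on the learning history.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AbstractedStructure:
    """Hypothetical model of a cognitive abstracted structure T."""
    name: str
    properties: frozenset[str]  # the property set Π of T
    min_shared: int             # assumed stand-in for the 'certain amount'


def shared_properties(perceived: set[str], T: AbstractedStructure) -> frozenset[str]:
    """Return Π*: those properties of a perceived structure t that lie in Π."""
    pi_star = frozenset(perceived) & T.properties
    assert pi_star <= T.properties  # Π* ⊆ Π holds by construction
    return pi_star


def is_instance(perceived: set[str], T: AbstractedStructure) -> bool:
    """Classify t as an instance of T if Π* is large enough."""
    return len(shared_properties(perceived, T)) >= T.min_shared


TABLE = AbstractedStructure(
    name="table",
    properties=frozenset({"flat top", "legs", "raised surface", "holds objects"}),
    min_shared=3,
)

# Many different concrete perceptions t can map onto the one structure T.
t1 = {"flat top", "legs", "raised surface", "white", "wooden"}
t2 = {"flat top", "legs", "holds objects", "metal"}
t3 = {"legs", "seat", "backrest", "wooden"}  # closer to a chair

print([is_instance(t, TABLE) for t in (t1, t2, t3)])  # [True, True, False]
```

Properties such as ‘white’ or ‘wooden’ do not count towards Π* here, since they belong to other abstracted structures; this mirrors the point that each part of the expression is mapped by φ separately.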

Thus the expression ‘There is a white wooden table’ as such is neither true nor false, because it is not clear which set of concrete perceptions should be derived from the possible internal meaning mappings. But if a concrete situation S with a concrete object with concrete properties is given, then a speaker can ‘translate’ his/her concrete perceptions with his/her learned meaning function φ into a composed expression using universal expressions. In such a situation, where the speaker is part of the real situation S, he/she can recognize that the given situation is an instance of the abstracted structures encoded in the used expression. Recognizing this instance relation interprets the universal expression in a way that makes it fit a real given situation, and thereby the universal expression is transformed by interpretation with φ into a concrete decidable expression.

SUMMING UP

Thus the decisive factor in turning undecidable universal expressions U(U)E into decidable concrete expressions (D)CE is a human actor A behaving as a speaker-hearer of the used language L. Without a speaker-hearer every universal expression is undefined, neither true nor false.

makedecidable : S × Ahum × E → E × {true, false}

This reads as follows: if you want to know whether an expression E is concrete and, being concrete, ‘true’ or ‘false’, then ask a human actor Ahum who is part of a concrete situation S; the human actor shall answer whether the expression E can be interpreted such that E can be classified as either ‘true’ or ‘false’.

The function ‘makedecidable()’ is therefore the description (like a ‘recipe’) of a real process in the real world with real actors. The important factors in this description are the meaning functions inside the participating human actors. Although it is not possible to describe these meaning functions directly, one can observe their behavior and define an abstract model which describes the observable behavior of speaker-hearers of the language L. Such a model is empirical and represents the typical case of the behavioral models used in psychology, biology, sociology etc.
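Read as such an abstract behavioral model, makedecidable can be sketched in Python. The sketch is a drastic simplification under stated assumptions: a situation S is reduced to a set of perception labels, the meaning function φ of a human actor is replaced by a hypothetical table `phi` from expressions to required perceptions, and the actor's judgment is modeled as a subset test. The names `HumanActor`, `judge` and `phi` are illustrative only. An expression the actor cannot interpret raises an error, mirroring the point that without a speaker-hearer the expression stays undefined.

```python
from dataclasses import dataclass

Situation = frozenset[str]  # hypothetical: perception labels available in S
Expression = str


@dataclass
class HumanActor:
    """Hypothetical speaker-hearer Ahum with a learned meaning table `phi`."""
    name: str
    phi: dict[Expression, frozenset[str]]  # stand-in for the meaning function φ

    def judge(self, s: Situation, e: Expression) -> bool:
        """Interpret e with phi and check the result against the situation s."""
        if e not in self.phi:
            # Without an interpretation the expression stays undefined.
            raise ValueError(f"{self.name} cannot interpret {e!r}")
        return self.phi[e] <= s


def makedecidable(s: Situation, a: HumanActor, e: Expression) -> tuple[Expression, bool]:
    """makedecidable : S × Ahum × E → E × {true, false} (toy model)."""
    return e, a.judge(s, e)


E = "There is a white wooden table."
B = HumanActor("B", {E: frozenset({"table", "white", "wooden"})})

room_1 = frozenset({"table", "white", "wooden", "chair"})
room_2 = frozenset({"table", "black", "metal"})

print(makedecidable(room_1, B, E))  # ('There is a white wooden table.', True)
print(makedecidable(room_2, B, E))  # ('There is a white wooden table.', False)
```

Keeping the actor as an explicit argument reflects the signature S × Ahum × E: the same expression in the same situation could be judged differently by an actor with a different learned `phi`.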

SOURCES

[1] Jakob Johann Freiherr von Uexküll (German: [ˈʏkskʏl]) (1864–1944): https://en.wikipedia.org/wiki/Jakob_Johann_von_Uexk%C3%BCll

[2] Jakob von Uexküll, 1909, Umwelt und Innenwelt der Tiere. Berlin: J. Springer. (Download: https://ia802708.us.archive.org/13/items/umweltundinnenwe00uexk/umweltundinnenwe00uexk.pdf )

[3] Wikipedia EN, Speech acts: https://en.wikipedia.org/wiki/Speech_act

[4] Ludwig Josef Johann Wittgenstein (1889–1951): https://en.wikipedia.org/wiki/Ludwig_Wittgenstein

[5] Ludwig Wittgenstein, 1953: Philosophische Untersuchungen [PU]; Philosophical Investigations [PI], translated by G. E. M. Anscombe. For more details see: https://en.wikipedia.org/wiki/Philosophical_Investigations