Integrating Engineering and the Human Factor (info@uffmm.org)

eJournal uffmm.org ISSN 2567-6458, February 27-March 16, 2021

Author: Gerd Doeben-Henisch

Email: gerd@doeben-henisch.de

Last change: March 16, 2021 (minor corrections)

HISTORY

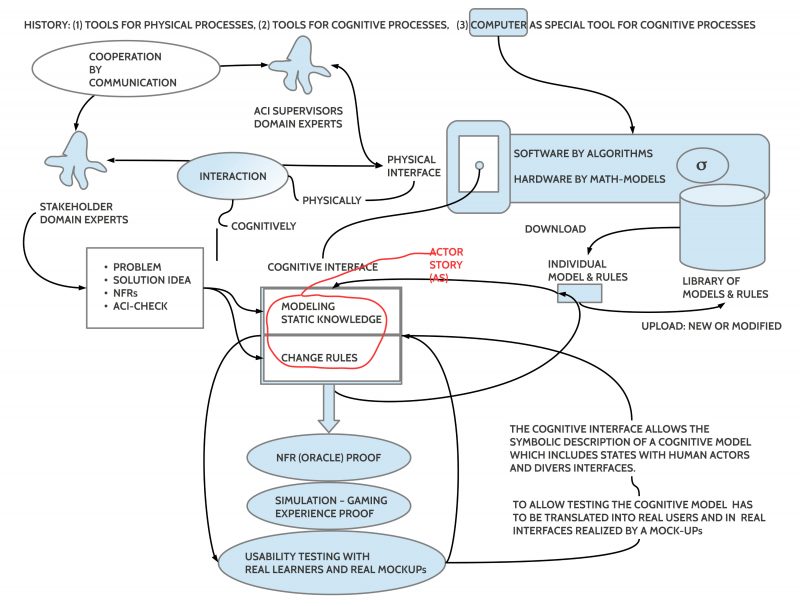

As described in the uffmm eJournal, the wider context of this software project is an integrated engineering theory called Distributed Actor-Actor Interaction [DAAI], further extended to the Collective Man-Machine Intelligence [CM:MI] paradigm. This document is part of the Case Studies section.

HMI ANALYSIS, Part 2: Problem & Vision

Context

This text is preceded by the following texts:

- HMI Analysis for the CM:MI paradigm. Part 1 (Feb 25, 2021 at 12:08)

Introduction

Before one starts the HMI analysis, some stakeholder (in our case the users are stakeholders and users in one role) has to present a given situation, classifiable as a ‘problem’, to depart from, together with a vision as the envisioned goal to be realized.

Here we give a short description of the problem for the CM:MI paradigm and of the vision of what should be gained.

Problem: Mankind on the Planet Earth

In this project, mankind on planet earth is understood as the primary problem. ‘Mankind’ is seen here as the life form called homo sapiens. Based on the findings of biological evolution, one can state that homo sapiens has, besides many other wonderful capabilities, at least two extraordinary capabilities:

Outside to Inside

The whole body, together with the brain, continuously converts body-external events into internal, neural events. The brain, located inside the body, also receives many events from within the body as if they were external events. Thus in the brain we can observe a mixture of body-external events (outside 1) and body-internal events (outside 2), realized as a set of billions of highly interrelated neural processes. Most of these neural processes are unconscious; a small part is conscious. Nevertheless, the unconscious and conscious events are neurally interrelated. This overall conversion from outside 1 and outside 2 into neural processes can be seen as a mapping. As we know today from biology, psychology and the brain sciences, this mapping is not a 1-1 mapping. The brain is filtering all the time, mostly unconsciously, selecting only those events which it judges to be important. Furthermore, the brain time-slices all its sensory inputs and stores these time-slices (called ‘memories’), whereby the stored time-slices again are not 1-1 copies. The storing of time-slices is a complex (unconscious) process with many kinds of operations like structuring, associating, abstracting, evaluating, and more. From this one can deduce that the content of an individual brain can differ considerably from the surrounding reality of one's own body as well as from the world outside one's own body. All kinds of perceived and stored neural events which are or can become conscious are here called conscious cognitive substrates or cognitive objects.

Inside to Outside (to Inside)

It is generally known that homo sapiens can produce with its body events which have some impact on the world outside the body. One kind of such events is the production of all kinds of movements, including gestures, running, grasping with the hands, painting and writing, as well as sounds made by the voice. Of special interest here are forms of communication between different humans, and more specifically those communications enabled by the spoken sounds of a language as well as by the written signs of a language. Spoken sounds as well as written signs are here called expressions associated with a known language. Expressions as such have no meaning (a non-speaker of a language L can hear or see expressions of the language L, but he/she/x will never understand anything). But as everyday experience shows, nearly every child starts very early to learn which kinds of expressions belong to a language and with what kinds of shared experiences they can be associated. This learning is related to many complex neural processes which map expressions internally onto conscious and unconscious cognitive objects (including expressions!). This mapping builds up an internal meaning function from expressions into cognitive objects and vice versa. Because expressions have a dual face (they are internal neural structures as well as body-external events, produced by conversion from the inside to the outside of the body), a homo sapiens can transmit its internal encoding of cognitive objects into expressions from its inside to the outside, and another homo sapiens can perceive the produced outside expression and map it into an internal expression. As far as the meaning function of the receiving homo sapiens is sufficiently similar to the meaning function of the sending homo sapiens, there is some probability that the receiving homo sapiens can activate from its memory cognitive objects which have some similarity with those of the sending homo sapiens.
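The role of the meaning function in communication can be illustrated with a small sketch. It is only a toy model under strong assumptions that are not part of the text above: a meaning function is reduced to a dictionary from expressions to sets of labels standing for cognitive objects, and ‘sufficiently similar’ is measured by the overlap of these sets (Jaccard similarity). The names `shared_meaning`, `sender` and `receiver` are hypothetical.

```python
# Toy model (assumption): a meaning function is a dict that maps an
# expression (string) to a set of labels standing for cognitive objects.

def shared_meaning(sender, receiver, expression):
    """Return the overlap (Jaccard similarity, 0.0 to 1.0) between the
    cognitive objects the sender encodes in an expression and those the
    receiver activates when perceiving the same expression."""
    sent = sender.get(expression, set())
    received = receiver.get(expression, set())
    if not sent or not received:
        # One side has no meaning for this expression: nothing is shared.
        return 0.0
    return len(sent & received) / len(sent | received)


# Two hypothetical speakers with partly different learning histories.
sender = {
    "dog": {"animal", "barks", "pet"},
    "bank": {"money", "building"},
}
receiver = {
    "dog": {"animal", "barks", "dangerous"},
    "bank": {"river", "shore"},
}

for expression in ("dog", "bank", "Hund"):
    print(expression, shared_meaning(sender, receiver, expression))
```

In this sketch the expression "dog" is partly understood, "bank" is heard but mapped onto entirely different cognitive objects, and "Hund" has no meaning for either speaker, which corresponds to the non-speaker case described above.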

Although we know today of different kinds of animals having some form of language, no species is known which is comparable to homo sapiens with regard to language. This explains to a large extent why the homo sapiens population was able to cooperate in a way which not only can include many persons but also can stretch over long periods of time and can include highly complex cognitive objects and associated behavior.

Negative Complexity

In 2006 I introduced the term negative complexity in my writings to describe the fact that in the world surrounding an individual person there is an amount of language-encoded meaning available which is beyond the capacity of an individual brain to process. Thus, whatever kind of experience or knowledge is accumulated in libraries and databases, if the negative complexity grows higher and higher, then this knowledge can no longer help individual persons, whole groups, or whole populations to make constructive use of it. What happens is that the intended well-structured ‘sound’ of knowledge is turned into a noisy environment which crashes all kinds of intended structures into nothing or into badly deformed somethings.

Entangled Humans

From quantum mechanics we know the idea of entangled states. But we need not dig into quantum mechanics to find other phenomena which manifest entangled states. Look around in your everyday world. There are many occasions where a human person is acting in a situation, but the bodily separateness is a fake. While sitting in front of a laptop in a room, the person is communicating within an online session with other persons. And depending on the social role, the membership in some social institution, and the participation in some project, this person will talk, perceive, feel, decide etc. with regard to the known rules of these social environments, which are represented as cognitive objects in his or her brain. Thus by knowledge, by cognition, the individual person is in this situation completely entangled with other persons who know of these roles and rules and who therefore follow these rules in their behavior too. Sitting with the body in a certain physical location somewhere on the planet does not matter in this moment. The primary reality is this cognitive space in the brains of the participating persons.

If you continue looking around in your everyday world you will probably detect that the everyday world is full of different kinds of cognitively induced entangled states of persons. These internalized structures function like protocols, like scripts, like rules in a game, telling everybody what is expected from him/her/x. To the extent that people adhere to such internalized protocols, daily life has some structure and some stability, and it enables the planning of behavior where cooperation between different persons is necessary. In a cognitively enabled entangled state the individual person becomes a member of something greater, becoming a super person. Entangled persons can do things which usually are not possible as long as one is working as a purely individual person.[1]

Entangled Humans and Negative Complexity

Although entangled human persons can in principle enable more complex events, structures, processes, engineering, and cultural work than single persons, human entanglement is still limited by the capacities of the brain as well as by the limits of normal communication. Increasing the amount of meaning-relevant artifacts or increasing the velocity of communication events makes things even worse. There are objective limits to human processing, beyond which it runs into negative complexity.

Future is not Waiting

The term ‘future’ is cognitively empty: nowhere does there exist an object which can be called ‘future’. What we have is some local actual presence (the Now), which the body is turning into internal representations of some kind (becoming the Past), but something like a future does not exist anywhere. Our knowledge about the future is radically zero.

Nevertheless, our bodies are part of a physical world (planet, solar system, …), and our entangled scientific work has identified some regularities of this physical world which can be used for predictions of what could happen, with some probability, as assumed states at which our clocks show a different time stamp. But because there are many processes running in parallel, composed of billions of parameters which can be tuned in many directions, a really good forecast is not simple and depends on many presuppositions.

Since its appearance some hundred thousand years ago in Africa, homo sapiens has become a game changer which makes all computations nearly impossible. This was not so at the beginning, but in the course of time homo sapiens enlarged its number and improved its skills in more and more areas, and meanwhile we know that homo sapiens has indeed started to crash more and more of the conditions of its own life. And thinking in principle shows that homo sapiens could crash even more than planet earth. Every member of homo sapiens has a built-in freedom which allows him/her/x at any time to decide to behave in a different way (although in everyday life we are mostly following some protocols). And this built-in freedom is guided by actual knowledge, by emotions, and by available resources. The same child can become a great musician, a great mathematician, a philosopher, a great political leader, an engineer, … but if the child is given no resources, is deprived of important social contexts, or is given the wrong knowledge, it cannot manifest its freedom in full richness. As a human population we need the best out of all children.

Because the processing of the planet, the solar system etc. is going on, we are in need of good forecasts of possible futures, beyond our classical concepts of sharing knowledge. This is where our vision enters.

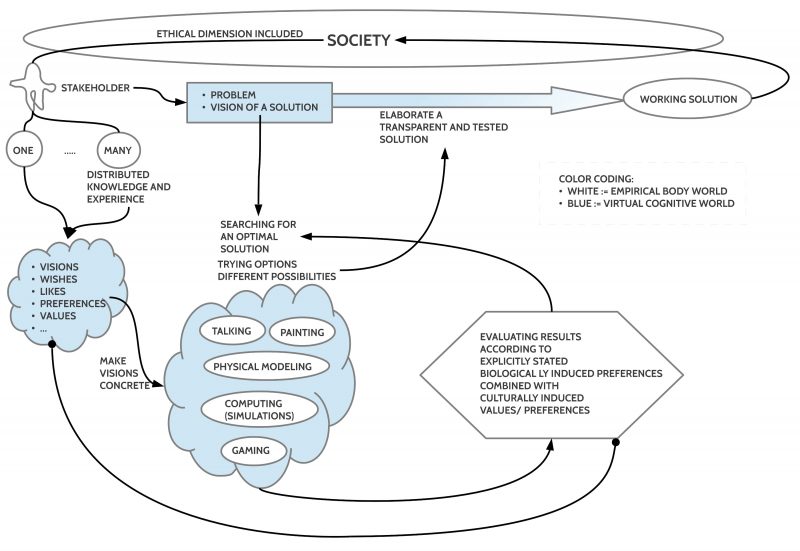

VISION: DEVELOPING TOGETHER POSSIBLE FUTURES

To find possible and reliable shapes of possible futures we have to exploit all experiences, all knowledge, all ideas, and all kinds of creativity by using maximal diversity. Because present knowledge can be false, as history tells us, we should not rule out those ideas which seem too crazy at first glance. Real innovations are always different from what we are used to at the time. Thus the following text is a first rough outline of the vision:

- Find a format

- which allows any kind of people

- for any kind of given problem

- with at least one vision of a possible improvement

- together

- to search and to find a path leading from the given problem (Now) to the envisioned improved state (future).

- For all needed communication any kind of everyday language should be enough.

- As needed this everyday language should be extendable with special expressions.

- These considerations about possible paths into the wanted envisioned future state should continuously be supported by appropriate automatic simulations of such a path.

- These simulations should include automatic evaluations based on the given envisioned state.

- As far as possible adaptive algorithms should be available to support the search, finding and identification of the best cases (referenced by the visions) within human planning.
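To make the idea of a simulated path with automatic evaluation more concrete, here is a minimal sketch. It is not the project's software; it rests on assumptions introduced only for illustration: a situation (the Now) is a set of everyday-language statements, a change rule is a hypothetical triple (condition, statements to remove, statements to add), and the evaluation is simply the fraction of the vision statements that hold in the current state. All function names and example statements are invented.

```python
# Minimal sketch under stated assumptions: states and visions are sets of
# everyday-language statements; rules are (condition, remove, add) triples.

def apply_rules(state, rules):
    """Apply once every rule whose condition holds in the current state."""
    new_state = set(state)
    for condition, remove, add in rules:
        if condition <= state:  # all condition statements are present
            new_state -= remove
            new_state |= add
    return new_state


def evaluate(state, vision):
    """Fraction of the vision statements already true in the state."""
    if not vision:
        return 1.0
    return len(vision & state) / len(vision)


def simulate(start, rules, vision, max_steps=10):
    """Return the path as a list of (state, score) pairs, stopping when the
    vision is reached, nothing changes any more, or max_steps is used up."""
    state = set(start)
    path = [(frozenset(state), evaluate(state, vision))]
    for _ in range(max_steps):
        next_state = apply_rules(state, rules)
        if next_state == state:
            break
        state = next_state
        path.append((frozenset(state), evaluate(state, vision)))
        if vision <= state:
            break
    return path


# Hypothetical example: a town without a park.
start = {"the town has no park", "citizens are willing to help"}
vision = {"the town has a park"}
rules = [
    ({"the town has no park", "citizens are willing to help"},
     set(), {"citizens plant trees"}),
    ({"citizens plant trees"},
     {"the town has no park"}, {"the town has a park"}),
]

for step, (state, score) in enumerate(simulate(start, rules, vision)):
    print(step, round(score, 2), sorted(state))
```

The design choice here is that every step is evaluated against the vision, so a group of people can see after each change how close a proposed path has come to the envisioned state. The adaptive algorithms mentioned in the last item could then search over alternative rule sets; that search is not part of this sketch.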

REFERENCES or COMMENTS

[1] One of the most common entangled states in daily life is the usage of normal language! A normal language L works only because the rules of usage of this language L are shared by all speaker-hearers of this language, and these rules are explicit cognitive structures (not necessarily conscious, mostly unconscious!).

Continuation

Yes, it will happen 🙂 Here.