This text is part of the text “Rebooting Humanity”

(The German Version can be found HERE)

Author No. 1 (Gerd Doeben-Henisch)

Contact: info@uffmm.org

(Start: June 22, 2024, Last change: June 22, 2024)

Starting Point

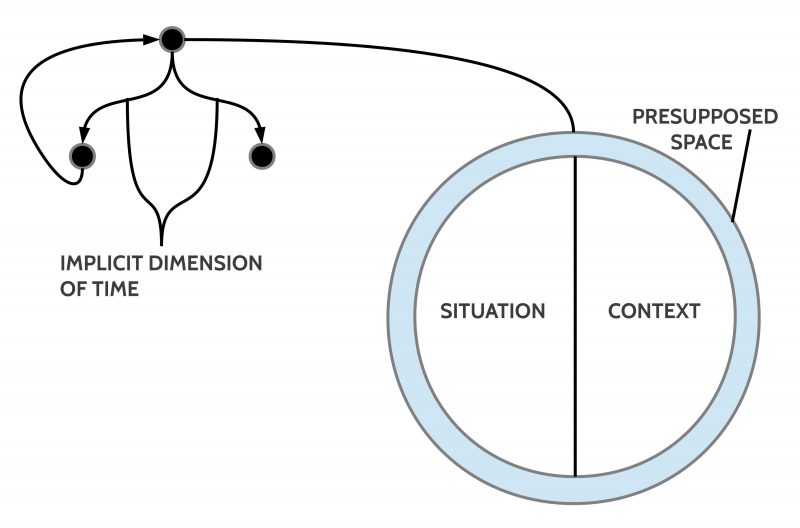

The main theme of this section is ‘collective human intelligence (CHI)’. However, it does not occur in isolation, detached from everything else. Rather, life on Planet Earth creates a complex network of processes which — upon closer examination — reveal structures that are consistent across all forms of life in their basic parameters, yet differ in parts. It must also be considered that these basic structures, in their process form, always intertwine with other processes, interacting and influencing each other. Therefore, a description of these basic structures will initially be rather sketchy here, as the enormous variety of details can otherwise lead one quickly into the ‘thicket of particulars’.

Important Factors

Basic Life-Pattern

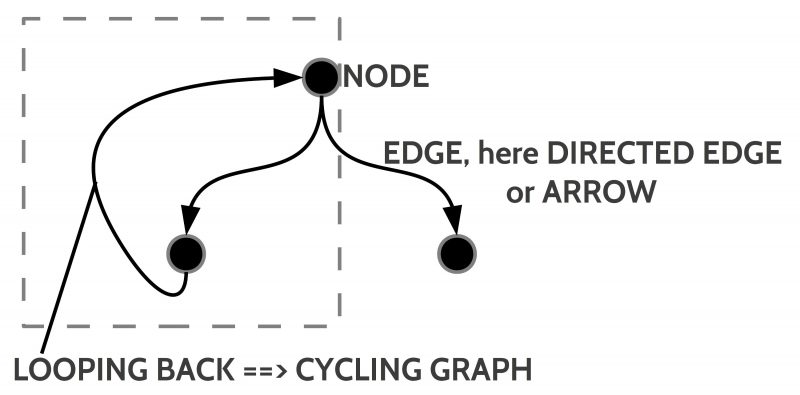

Starting from the individual cell up to the most powerful cell galaxies [1] that life has produced so far, every identifiable form of life exhibits a ‘basic pattern’ of the life process that runs like a common thread through all forms of life. As long as there is more than a single life system (a ‘population’), the following basic cycle persists throughout the entire lifespan: reproduction of Generation 1 – birth of Generation 2 – growth of Generation 2 – onset of behavior of Generation 2 accompanied by learning processes – reproduction of Generation 2 – ….
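The scale comparison behind the term ‘cell galaxy’ in note [1] can be checked with a few lines of arithmetic. This is only a sketch: the cell count of roughly 140 trillion is not stated in the text but derived from the note’s own figures (700 galaxies at 200 billion stars each), and the names `STARS_PER_GALAXY`, `GALAXIES`, and `galaxies_for` are made up for illustration.

```python
# Figures taken from note [1]; everything else is derived from them.
STARS_PER_GALAXY = 200 * 10**9   # chosen estimate within the 100-400 billion range
GALAXIES = 700                   # number of Milky Way-sized galaxies per body

# One cell per star: the cell count implied by the note (an inference, not a quoted value).
implied_cells = STARS_PER_GALAXY * GALAXIES


def galaxies_for(cells, stars_per_galaxy=STARS_PER_GALAXY):
    """Number of galaxies needed if every cell corresponds to one star."""
    return cells / stars_per_galaxy


print(f"implied cells: {implied_cells:,}")
for stars in (100 * 10**9, 200 * 10**9, 400 * 10**9):
    print(f"{stars:,} stars per galaxy -> {galaxies_for(implied_cells, stars):,.0f} galaxies")
```

With the lower and upper star estimates from the note, the same body would correspond to 1,400 or 350 galaxies, so the figure of 700 depends directly on the chosen estimate.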

Genetic Determinism

This basic pattern, in the phases of ‘reproduction’ and ‘birth’, is largely ‘genetically determined’. [2] The growth process itself (the development of the cell galaxy) is also strongly genetically determined, but factors from the environment increasingly come into play and can modify the growth process to varying degrees. Thus, the outcomes of reproduction and growth can vary more or less significantly.

Learning

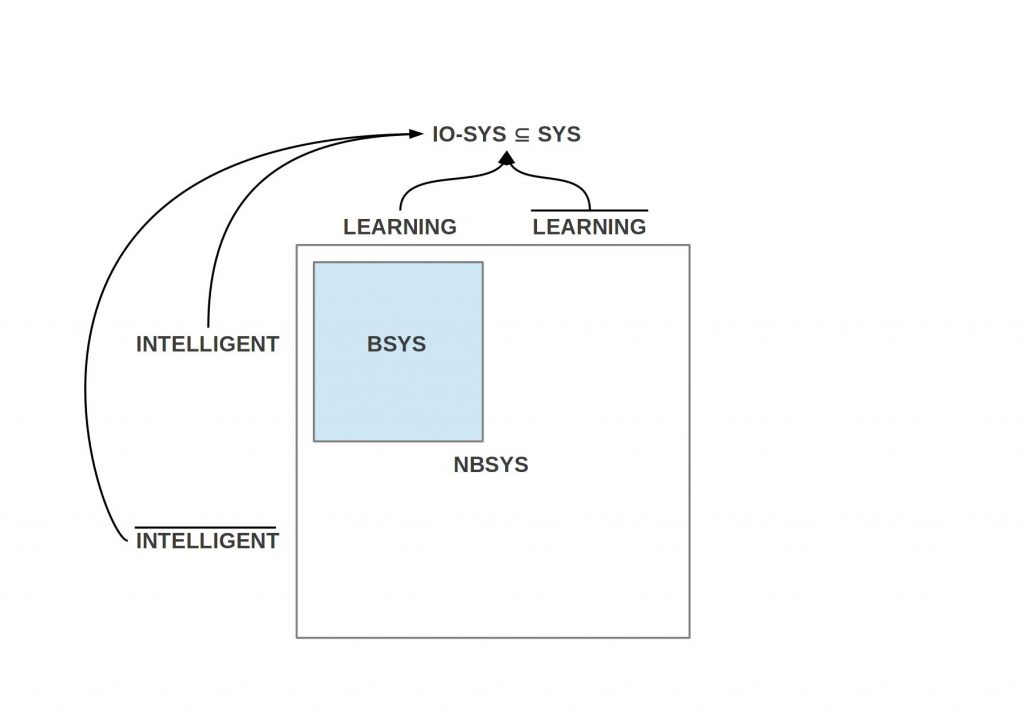

As growth transforms the cell galaxy into a ‘mature’ state, the entire system can enable different ‘functions’ that increasingly facilitate ‘learning’.

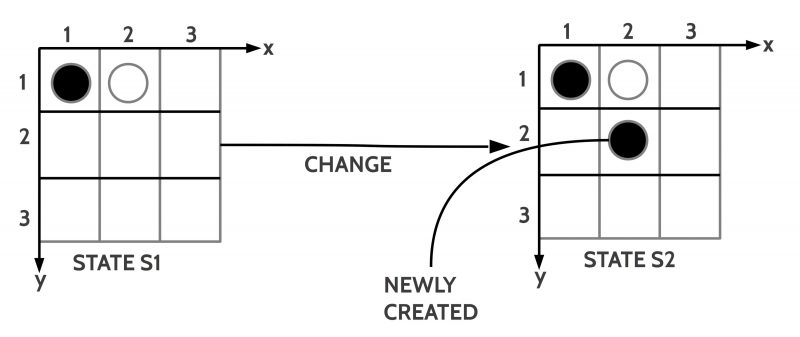

A minimal concept of learning related to acting systems identifies the ‘learning of a system’ by the fact that the ‘behavior’ of a system changes in relation to a specific environmental stimulus over a longer period of time, and this change is ‘more than random’. [3]
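The ‘more than random’ criterion can be made concrete in a small sketch. It rests on several assumptions that are not in the text: the system chooses among a fixed number of responses, pure chance means each response is equally likely (the ‘distribution-free’ case of note [3]), and an exact binomial tail probability below a threshold of 0.01 counts as ‘more than random’. All function names and the sample data are hypothetical.

```python
from math import comb


def binomial_tail(n, k, p):
    """Probability of at least k successes in n independent trials with success chance p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def more_than_random(responses, target, n_options, alpha=0.01):
    """True if `target` occurs more often than equal-chance guessing would plausibly produce."""
    hits = sum(1 for r in responses if r == target)
    return binomial_tail(len(responses), hits, 1 / n_options) < alpha


def looks_like_learning(early, late, target, n_options, alpha=0.01):
    """Behavior changed over time: chance level in the early window, above chance in the late one."""
    return (not more_than_random(early, target, n_options, alpha)
            and more_than_random(late, target, n_options, alpha))


# Hypothetical responses of one system to the same stimulus, early and late in the observation.
early = ["left", "right", "stay", "right", "left", "stay",
         "left", "right", "stay", "left", "right", "stay"]
late = ["left"] * 10 + ["right", "stay"]

print(looks_like_learning(early, late, "left", n_options=3))
```

The threshold and the window sizes are design choices of the sketch. A different test or a stricter threshold would fit the same minimal concept of learning.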

‘Learning’ ranges on a scale from ‘relatively determined’ to ‘largely open’. ‘Open’ here means that the control by genetic presets decreases and the system can have individually different experiences within a spectrum of possible actions.

Experiences

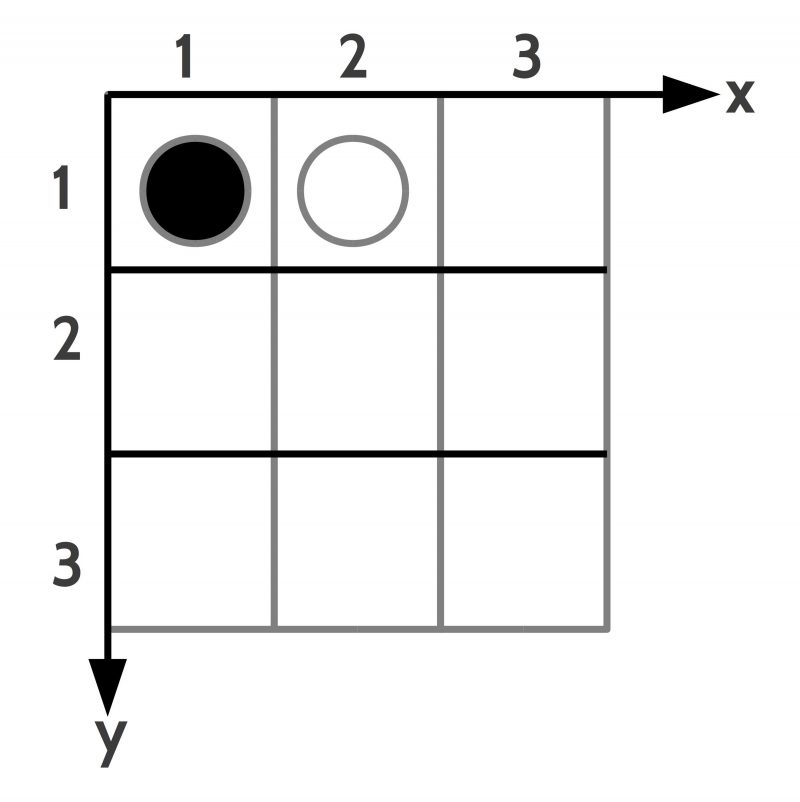

Experiences gain their ‘format’ within the following coordinates:

(i) (sensory) perception,

(ii) abstractions of sensory perceptions which are generated internally and which

(iii) are retrievable inside the system. The totality of such conditionally retrievable (recallable) abstractions is also called memory content. [4]

(iv) the possibility of arbitrary abstractions from abstractions, the

(v) storage of sequential events as well as

(vi) abstractions of sequentially occurring events, and the

(vii) free combination of abstractions into new integrated units.

Additionally,

(viii) the ‘perception of internal bodily events’ (also called ‘proprioceptive’ perception; for internal states such as hunger or thirst, ‘interoceptive’ is the more precise term) [5], which can ‘link (associate)’ with all other perceptions and abstractions. [6] It is also important to note that it is a characteristic of perception that

(ix) events almost never occur in isolation but appear as ‘part of a spatial structure’. Although ‘subspaces’ can be distinguished (visually, acoustically, tactilely, etc.), these subspaces can be integrated into a ‘total space’ that has the format of a ‘three-dimensional body space’, with one’s own body as part of it. ‘In thought’, we can consider individual objects ‘by themselves’, detached from a body space, but as soon as we turn to the ‘sensory space of experience’, the three-dimensional spatial structure becomes active.

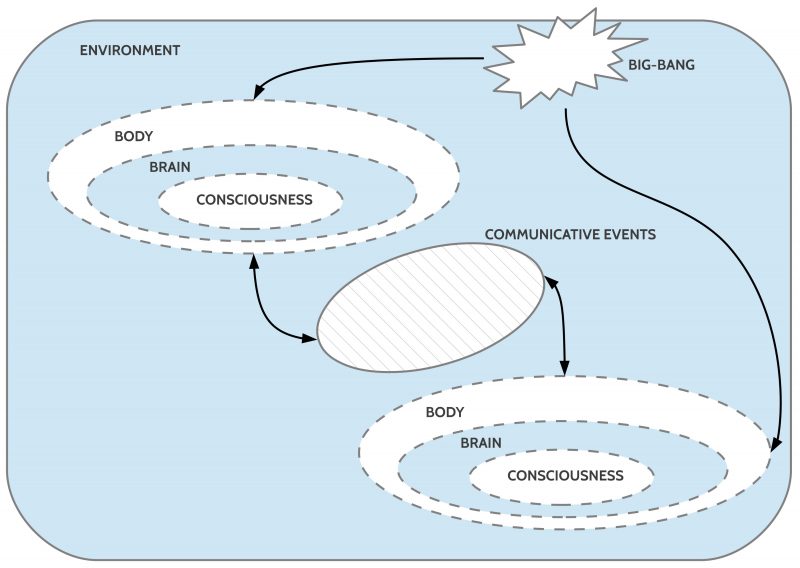

Individual – Collective

In the individual experience of everyday situations, this ‘inner world of experience’ takes shape in a multitude of ways, largely unconsciously.

However, as soon as ‘human systems’—in short, people—are not alone in a situation but together with others, these people can perceive and remember the same environment ‘similarly’ or ‘differently’. In their individual experiences, different elements can combine into specific patterns.

Coordination of Behavior

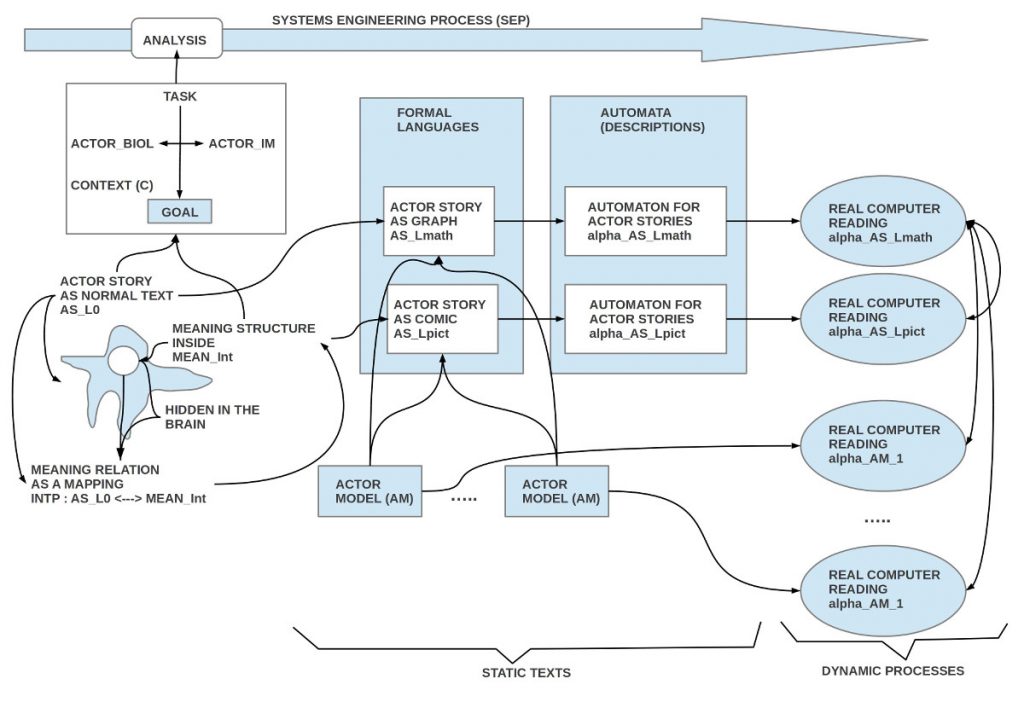

It becomes interesting when different people try to coordinate their behavior, even if it is just to make ‘contact’ with each other. And, although this can also be achieved in various ways without explicit symbolic language [7], sophisticated, complex collective action involving many participants over long periods with demanding tasks, according to current knowledge, is only possible with the inclusion of symbolic language.

The ability of humans to use languages seems to be fundamentally genetically conditioned. [8] ‘How’ language is used, ‘when’, ‘with which memory contents language is linked’, is not genetically conditioned. People must learn this ‘from the particular situation’ both individually and collectively, in coordination with others, since language only makes sense as a ‘common means of communication’ that should enable sophisticated coordination.

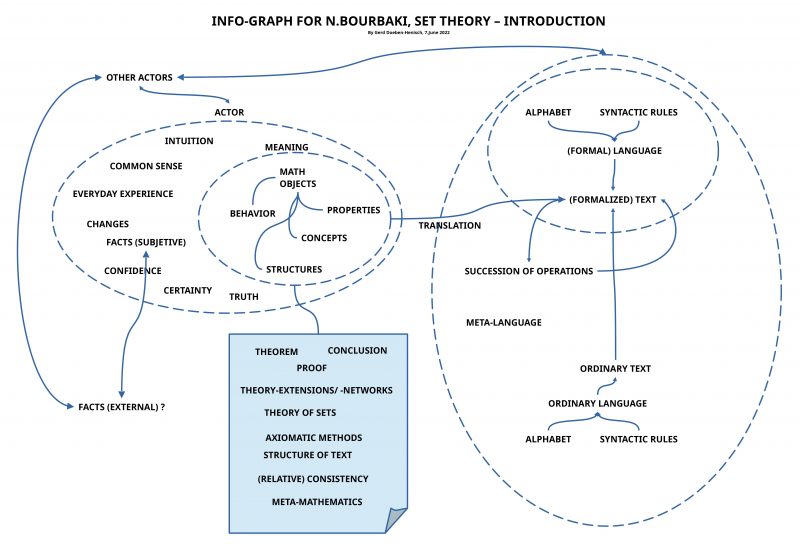

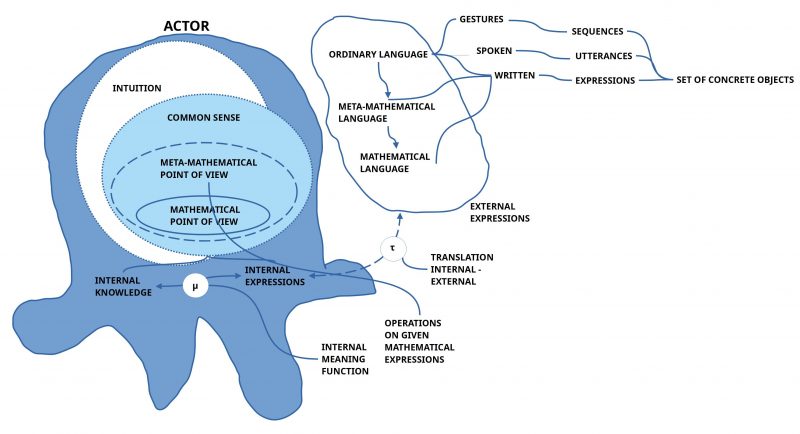

Linguistic Meaning

The system of sounds (and later symbols) used in a language is ultimately decided by those who initially use the language together. [9] Given the fact that there are many thousands of languages [10], one can conclude that there is considerable freedom in the specific design of a language. [11]

This ‘freedom in the specific design’ is most evident in the association between language elements and potential meanings. The same ‘object’, ‘event’, or ‘fact’ can be expressed completely differently by people using different languages. [12] And this ‘difference’ refers not only to the naming of individual properties or objects but occurs within a ‘larger context’ of ‘perspectives’ on how the everyday world is perceived, classified, and enacted within a specific language community. These differences also occur ‘within a language community’ in the form of ‘milieus’ or ‘social strata’, where the ‘practice of life’ differs.

The most important aspect of the relationship between the language system (sounds, symbols, the arrangement of sounds and symbols) and possible ‘meaning content’ is that any type of ‘content’ is located within the person (inside the ‘system’).[13]

As the brief sketch above on the space of experience suggests, the elements that can constitute an experiential space are so layered and dynamic that an assignment between language elements and elements of experience is fundamentally incomplete. Additionally, two different systems (people) can only agree on elements of experience as ‘linguistic meaning’ if they have ‘common reference points’ in their individual experiential spaces. Due to the structure of the system, there exist only the following ‘types of reference points’:

(i) There are sensory perceptions of circumstances in a commonly shared environment that can be perceived ‘sufficiently’ similarly by all participants (and are automatically (unconsciously!) transformed into more abstract units in the experiential space).

(ii) There are internal body perceptions that normally occur similarly in each person due to a genetically determined structural similarity.[14]

(iii) There are internal perceptions of internal processes that also normally occur similarly in each person due to a genetically determined structural similarity. [15]

The more ‘similar’ such internal structures are and the more ‘constant’ they occur, the greater the likelihood that different language participants can form ‘internal working hypotheses’ about what the other means when using certain linguistic expressions. The more the content of experience deviates from types (i) – (iii), the more difficult or impossible it becomes to reach an agreement.

The question of ‘true statements’ and ‘verifiable predictions’ is a specific subset of the problem of meaning, which is treated separately.

Complex Language Forms

Even the assignment and determination of meaning in relatively ‘simple’ linguistic expressions is not straightforward, and it quickly becomes ‘blurred’ and ‘vague’ in ‘more complex language forms’. The discussions and research on this topic are extremely extensive. [16]

I would like to briefly recall the example of Ludwig Wittgenstein. In his early work, ‘Tractatus Logico-Philosophicus’ (1921), he first worked through, as an experiment, the very simple concept of meaning used in modern formal logic. Many years later (1936 – 1946), he reexamined the problem of meaning using everyday language and many concrete examples. He discovered, not surprisingly, that ‘normal language’ functions differently and is significantly more complex than the simple meaning model of formal logic assumed. [17] What Wittgenstein discovered in his ‘everyday’ analyses was so multi-layered and complex that he found himself unable to transform it into a systematic presentation. [18]

Generally, it can be said that to this day there is not even a rudimentary ‘theory of the meaning of normal language’ that is both comprehensive and accurate. [19]

The emergence and proliferation of ‘generative artificial intelligence’ (Generative AI) in recent years [20] may offer an unexpected opportunity to revisit this problem entirely anew. Here, modern engineering demonstrates that simple algorithms, which possess no inherent knowledge of meaning of their own, are capable of producing linguistic output by using only language material in linguistic interaction with humans. [21] This output is substantial, structured, and arranged in such a way that humans perceive it as if it were generated by a human, which in a sense it ultimately is. [22] What a person admires in this linguistic output is essentially ‘himself’, though not in his individual language performances, which are often worse than those generated by the algorithms. What the individual user encounters in such generated texts is essentially the ‘collective language knowledge’ of millions of people, which, in this extracted form, would not be accessible to us without these algorithms.

These generative algorithms [23] can be compared to the invention of the microscope, the telescope, or modern mathematics: all these inventions have enabled humans to recognize facts and structures that would have remained invisible without these tools. The true extent of collective linguistic performances would remain ‘invisible’ without modern technology, simply because humans without these technologies could not comprehend the scope and scale, along with all the details.
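The principle described in note [22], generating ‘highly probable’ combinations from expressions that were actually used, can be illustrated with a deliberately tiny sketch. It is not how systems such as ChatGPT are built; it swaps in a simple word-pair (bigram) counter as a stand-in, and the three-sentence corpus as well as the function names are invented for illustration.

```python
from collections import Counter, defaultdict


def build_bigram_counts(texts):
    """Count, for every word, which words followed it in the given texts."""
    counts = defaultdict(Counter)
    for text in texts:
        words = text.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def most_probable_continuation(counts, start, length=4):
    """Extend `start` by repeatedly appending the most frequently observed next word."""
    words = [start]
    for _ in range(length):
        followers = counts.get(words[-1])
        if not followers:
            break  # this word was never followed by anything in the corpus
        # Most frequent follower first; ties are broken alphabetically to stay deterministic.
        best = min(followers, key=lambda w: (-followers[w], w))
        words.append(best)
    return " ".join(words)


# Hypothetical stand-in for the 'millions of texts produced by humans' of note [21].
corpus = [
    "the child learns a language",
    "the child learns a game",
    "the child hears a language",
]

counts = build_bigram_counts(corpus)
result = most_probable_continuation(counts, "the")
print(result)
```

Every word pair in the output was taken from the human-written corpus. The sketch has no access to meaning and only reflects how often the writers combined these expressions.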

Preliminary Interim Result

The considerations so far only give a first outline of what collective intelligence can be or is.

[1] Reminder: The number of stars in our home galaxy, the Milky Way, is estimated at 100 – 400 billion. If we take 200 billion as our estimate, then our body system would correspond to 700 galaxies the size of the Milky Way, one cell for one star (which implies roughly 140 trillion cells)… a single body!

[2] We know today that genes can change in different ways or be altered during various phases.

[3] Simplifying, we can say that ‘randomness’ in a ‘distribution-free form’ means that each of the known possible continuations is ‘equally likely’; none of the possible continuations shows a ‘higher frequency’ over time than any of the others. Randomness with an ‘implicit distribution’ is noticeable because, although the individual continuations are random, certain continuations occur more or less frequently over time than others. Taken together, these individual frequencies reveal a ‘pattern’ by which the randomness can be characterized. An example of ‘randomness with a distribution’ is the ‘normal (or Gaussian) distribution’.

[4] The concept of ‘memory’ is a theoretical notion, the empirical and functional description of which has not yet been fully completed. Literature distinguishes many different ‘forms of memory’ such as ‘short-term memory’, ‘long-term memory’, ‘sensory memory’, ‘episodic memory’, and many more.

[5] For example, ‘joint positioning’, ‘pain’, ‘feeling of fullness’, ‘discomfort’, ‘hunger’, ‘thirst’, various ‘emotions’, etc.

[6] One then perceives not just a ‘blue sky’ and feels a ‘light breeze on the skin’ at the same time, but also experiences a sense of ‘malaise’, ‘fever’, perhaps even a ‘feeling of dejection’ and the like.

[7] In biological behavioral research, there are countless examples of life forms showing impressive coordination achievements, such as during collective hunting, organizing the upbringing of offspring, within the ‘family unit’, learning the ‘dialects’ of their respective languages, handling tools, etc.

[8] Every child can learn any known human language anywhere, provided there is an environment in which a language is practiced. Many thousands of different languages are known.

[9] The exact mechanism by which a language first arises among a group of people remains unclear to this day. However, a growing number of computer simulations of language learning (language evolution) attempt to shed light on this. Given the enormous number of factors involved in real language use, these simulations still appear ‘rather simple’.

[10] As we know from many records, there were many other languages in previous times that eventually died out (‘dead languages’), and only written records of them exist. It is also interesting to consider cases in which a language has ‘evolved’, where older forms continue to exist in texts.

[11] Language research (many different disciplines work together here) suggests the working hypothesis that (i) the type and scope of sounds used in a language, due to the human speech apparatus, represent a finite set that is more or less similar across all languages, although there are still different sets of sounds between the major language families. Furthermore, (ii) many analyses of the structure of spoken and then written language suggest that there are ‘basic structures’ (‘syntax’) that can be found—with variations—in all languages.

[12] Anyone who comes into contact with people who speak a different language can experience this up close and concretely.

[13] In light of modern brain research and general physiology, the ‘location’ here would be assumed to be the brain. However, this localization is of little use, as research into the material brain, along with its interactions with the surrounding body, has hardly been able to grasp the exact mechanisms of ‘meaning assignments’ (just as one cannot identify the algorithm being executed from the physical signals of computer chips alone).

[14] For example, ‘hunger’, ‘thirst’, ‘toothache’…

[15] The ‘remembering’ of something that has happened before; the ‘recognition’ of something sensually concrete that one can ‘remember’; the ‘combining’ of memorable things into new ‘constellations’, and much more.

[16] Parts of this discussion can be found in the context of ‘text analyses’, ‘text interpretations’, ‘hermeneutics’, ‘Bible interpretation’, etc.

[17] Which is not surprising at all, since modern formal logic could only arise because it had programmatically and radically departed from everything that has to do with everyday linguistic meaning. What was left were only ‘stubs of an abstract truth’ in the form of abstract ‘truth values’ that were devoid of any meaning.

[18] His posthumously published ‘Philosophical Investigations’ (1953) therefore offer ‘only’ a collection of individual insights, but these were influential enough to impact reflections on linguistic meaning worldwide.

[19] The list of publications with titles around the ‘meaning of language’ is exceedingly long. However, this does not change the fact that none of these publications solves the problem satisfactorily and comprehensively. It is currently not foreseeable how a solution could emerge, as this would require the cooperation of many disciplines, which in current university operations are spread out and separated into ‘existences of their own’.

[20] With ChatGPT as an example.

[21] Millions of texts produced by humans for the purpose of ‘communicating content’.

[22] Which ultimately is true, because the algorithms themselves do not ‘invent’ text, but use ‘actually used’ linguistic expressions from existing texts to generate ‘highly probable’ combinations of expressions that humans would likely use.

[23] These cannot be seen in isolation: without extremely powerful computing centers along with corresponding global networks and social structures that make widespread use possible, these algorithms would be worthless. Here, indirectly, what has become possible and functions in everyday life due to collective human intelligence also shines through.