eJournal: uffmm.org,

ISSN 2567-6458, 21.May 2019

Email: info@uffmm.org

Author: Gerd Doeben-Henisch

Email: gerd@doeben-henisch.de

Change: 26.May 2019 (Extending from two to three selected authors)

CONTEXT

This text is part of the larger text dealing with the Actor-Actor Interaction (AAI) paradigm.

OBJECTIVE

The following text focuses on the global environment of the AAI approach: the cooperation and/or competition between societies, and the strong impact of the new digital technologies. Numerous articles and books deal with these phenomena. For the present focus I would like to mention three books in particular: (i) from the point of view of the technology driver, the book by Eric Schmidt and Jared Cohen (2013), both from Google, seems to be an impressive illustration of what will be possible in the near future; (ii) from the point of view of a technology-aware historian, the book by Yuval Noah Harari (2018) can help to deepen these impressions and points to the increasingly difficult role of mankind itself; finally, (iii) from the point of view of a society-thriller author, Marc Elsberg (2019) shows within a quite realistic scenario how a global lack of understanding can plunge countries worldwide into a disaster that seems to be unnecessary.

I condense the many different aspects of the views of the first two authors into a single confrontation: Digital Slavery vs. Digital Empowerment.

DIGITAL SLAVERY

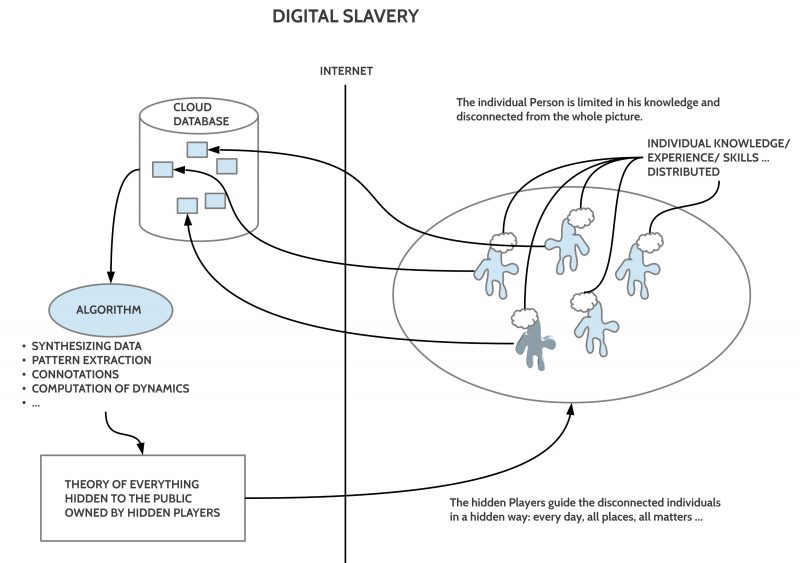

If one steps back from the stream of events in everyday life and looks at the more general structure working behind and implicit in all these events, one can recognize an incredible amount of data collection by only a few services behind the scenes. The individual user is mostly separated from all the others and equipped only with limited individual knowledge, experiences, skills, and preferences, most of them unconscious. Meanwhile his/her behavior is stored in central cloud spaces, where each record, taken by itself, is only a single, individual piece of data of seemingly little importance. People asked about their data usually do not worry much about questions of security. An often heard argument in this context is that they have nothing to hide; they are only normal persons.

What they do not see, and cannot see because it is completely hidden from outsiders, is the fact that there exist hidden algorithms which can synthesize all these individual data, extracting different kinds of patterns, reconstructing timelines, adding diverse connotations, and computing dynamics that point to a possible future. With the individual data of normal users, the hidden owners (the ‘dark lords’) of these storage spaces and algorithms can build overall pictures of many hundreds of millions of users, companies, offices, communal institutions etc., which enable very specific plans and actions to exploit economic, political, and military opportunities. With this knowledge they can destroy nearly any kind of company at will, and they can introduce new companies before anybody else has the faintest idea that there is a new possibility. This pattern concentrates capital more and more in the hands of a decreasing number of owners and turns more and more people into an anonymous mass of the poor.

The individual user does not know about any of this. In this state of not knowing, the user mutates from a free democratic citizen into something that is formally perhaps still free but materially completely manipulated. This is not limited to the normal citizen; it holds for managers, mayors, and most kinds of politicians too. Traditional societies are drained and turned more and more into zombie states.

Is there no chance to overcome this destructive overall scenario?

DIGITAL EMPOWERMENT

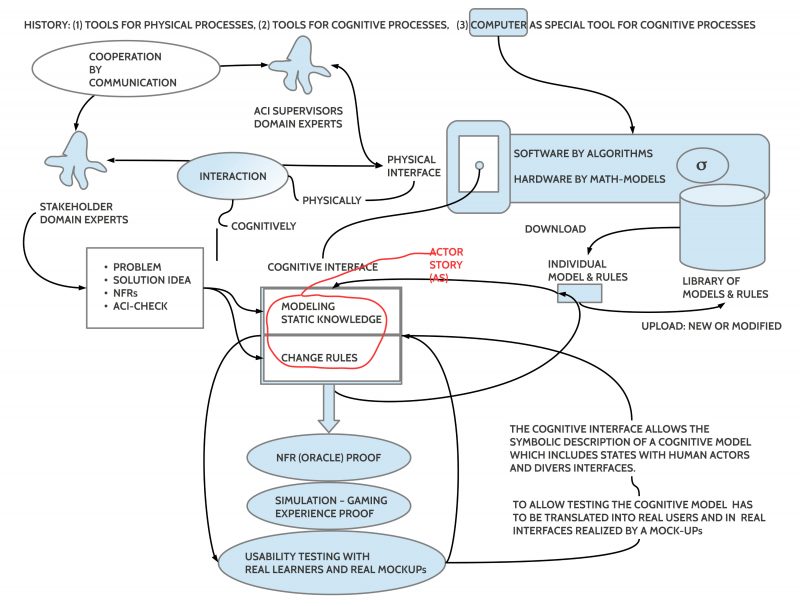

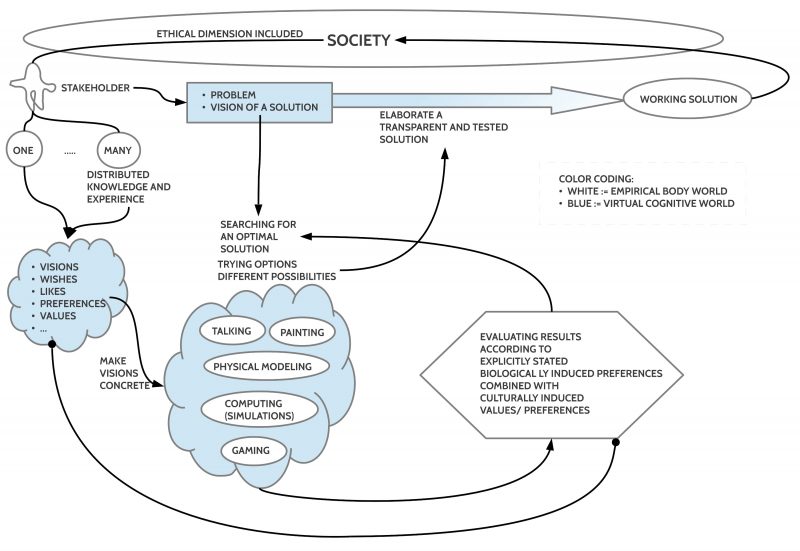

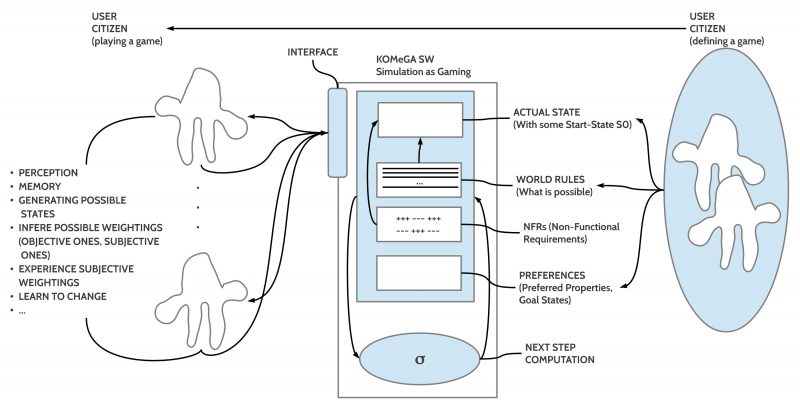

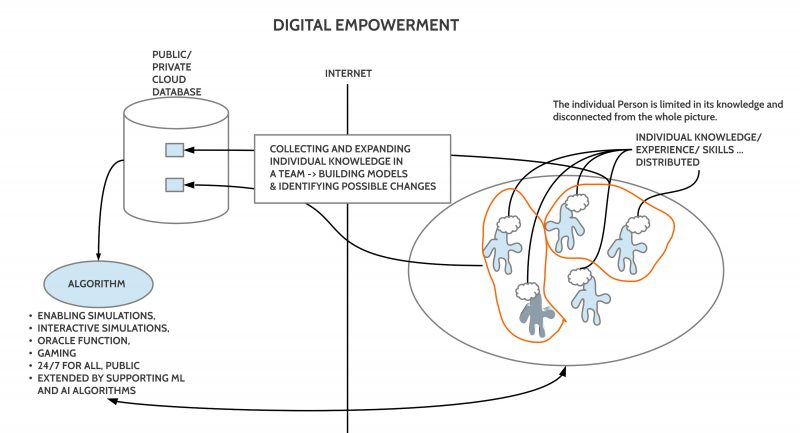

There are alternatives to the current digital slavery paradigm. These alternatives try to help the individual user, citizen, manager, mayor etc. to bridge his/her isolation by supporting a new kind of team-based modeling of the common reality, which is stored in public storage spaces accessible to all around the clock, every day of the year. Here too one can add algorithms which support the contributing users through simulations, playing modes, oracle computations, the connection of different models into one model, and much more. Such an approach frees individuals from their individual enclosures: they share creative ideas, search together for better solutions, and use modeling techniques, simulation techniques, and several kinds of machine learning (ML) and artificial intelligence (AI) to improve the construction of possible futures far beyond individual capacities alone.

This alternative approach allows real open knowledge and real informed democracies. This is not slavery by dark lords but common empowerment by free people.

Anyone who has already read some of the texts related to the AAI paradigm will know that the AAI paradigm covers exactly this empowering view of a modern open democratic society.

COOPERATIVE SOCIETIES

At first glance this idea of a digitally empowered society may look like an empty procedure: everybody is enabled to communicate and think with others, but what about the daily economy which organizes the stream of goods, services, and resources? The main mode of interaction at the beginning of the 21st century seems to demonstrate the inability of the current open liberal societies to really fight inequalities. The political system appears to be too weak to enable efficient structures.

It has been known for years, based on mathematical models, that a truly cooperative society is much more stable than any other kind of system and even much more productive. These insights are not at work worldwide. The reason is that the actors in the financial and political systems follow different models in their heads, which until now have opposed any kind of change. Several cultural and emotional factors stand against different views, different practices, different procedures. Here improved communication and planning capabilities can be of help.

REFERENCES

- Marc Elsberg. Gier. Wie weit würdest Du gehen? Blanvalet Publisher (part of Random House), Munich, 2019.

- Yuval Noah Harari. 21 Lessons for the 21st Century. Spiegel & Grau, Penguin Random House, New York, 2018.

- Eric Schmidt and Jared Cohen. The New Digital Age. Reshaping the Future of People, Nations and Business. John Murray, London (UK), 1st edition, 2013. URL https://www.google.com/search?client=ubuntu&channel=fs&q=eric+schmidt+the+new+digital+age+pdf&ie=utf-8&oe=utf-8