eJournal: uffmm.org, ISSN 2567-6458, 17.March 2022 – 22.March 2022, 8:40

Email: info@uffmm.org

Author: Gerd Doeben-Henisch

Email: gerd@doeben-henisch.de

SCOPE

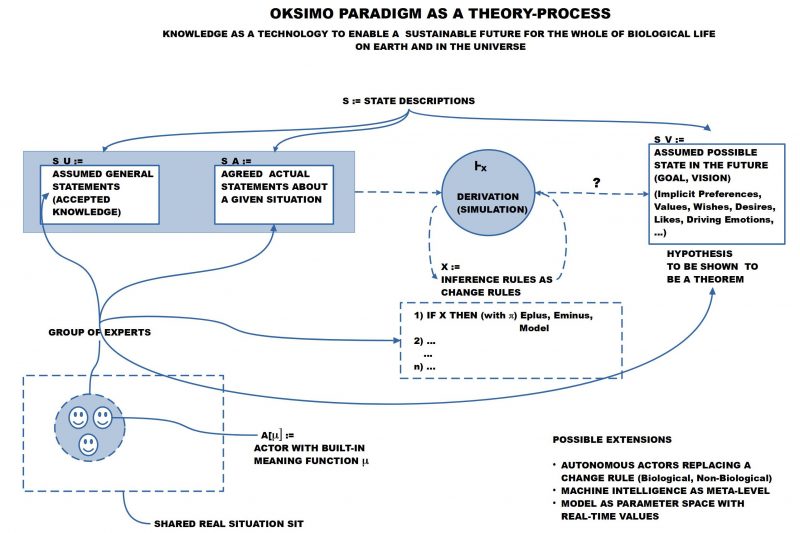

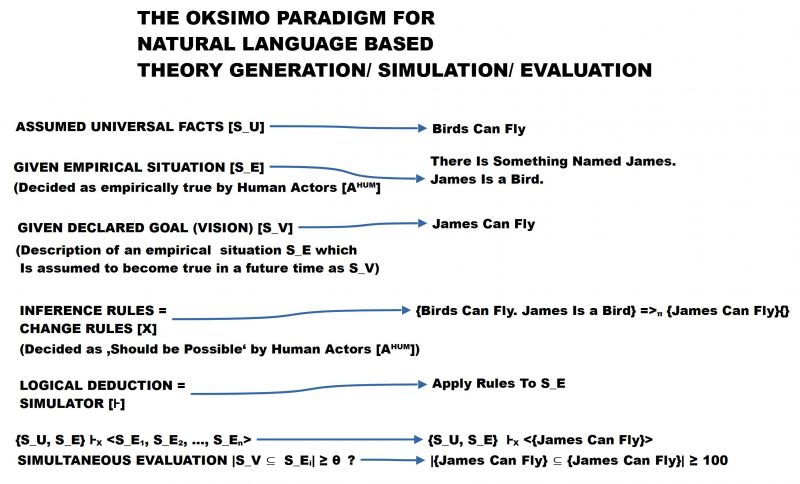

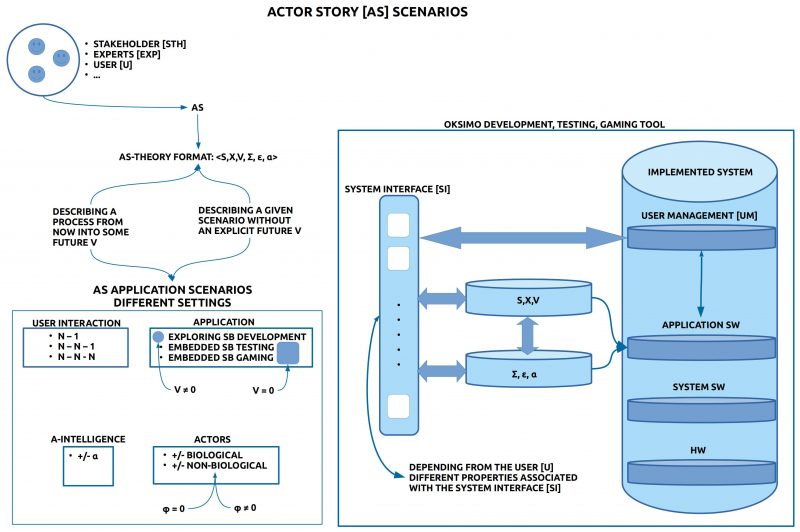

In the uffmm review section, different papers and books are discussed from the point of view of the oksimo paradigm. [1] In the following text the author discusses some aspects of the book “Collective Intelligence: Mankind’s Emerging World in Cyberspace” by Pierre Lévy (translated by Robert Bononno), 1997 (French: 1994). [2]

PREVIEW

Before starting a more complete review, here is a notice in advance.

I started reading this book by Pierre Lévy only recently, after working intensively for more than four years on the problem of an open knowledge space for everybody as a genuine part of cyberspace. I have approached the problem from several disciplines, culminating in a new theory concept which additionally has a direct manifestation in a new kind of software. While I am now testing version 2 of this software, having in parallel worked through several papers of the early, the middle, and the late Karl Popper [3], I discovered this book by Lévy [*] and was deeply impressed by its preface. His view of mankind and cyberspace is intellectually deep and a real piece of art. I had the feeling that this text could be, without compromise, a direct preview of our software paradigm, although I did not know about him before.

Wanting to know more about him, I discovered some more interesting books and especially his blog intlekt – metadata [4], where he develops his vision of a new language for a new ‘collective intelligence’ practiced in cyberspace. While his ideas about ‘collective intelligence’ associated with ‘cyberspace’ are fascinating, it appears to me that his ideas about a new language are strongly embedded in ‘classical’ concepts of language, semiotics, and computers, concepts which, in my view, are not sufficient for a new language enabling collective intelligence.

Thus it can become an exciting reading, with continuous reflections on the conditions of ‘collective intelligence’ and the ‘role of language’ within it.

Chapter 1: Introduction

Position Lévy

The following description of the position of Lévy described in his 1st chapter is clearly an ‘interpretation’ from the ‘viewpoint’ of the writer at this time. This is more or less ‘inevitable’. [5]

A good starting point for the project of ‘understanding the book’ seems to be the historical outline which Lévy gives on pages 5-10. Starting with the appearance of the homo sapiens, he characterizes different periods of time with different cultural patterns triggered by the homo sapiens. In the last period, which is still ongoing, knowledge takes radically new ‘forms’; one central feature is the appearance of ‘cyberspace’.

Primarily, cyberspace is ‘machine-based’: some material structure, enhanced with a certain type of dynamics enabled by algorithms working in the machine. But as part of the cultural life of the homo sapiens, cyberspace is also a cultural reality increasingly interacting directly with individuals, groups, institutions, companies, industry, nature, and even more. And in this space enabled by interactions the homo sapiens encounters not only technical entities, but also effects, events, and artifacts produced by other homo sapiens companions.

Lévy calls this a “re-creation of the social bond based on reciprocal apprenticeship, shared skills, imagination, and collective intelligence.” (p.10) And he adds as a supplement that “collective intelligence is not a purely cognitive object.” (p.10)

Looking into the future Lévy assumes two main axes: “The renewal of the social bond through our relation to knowledge and collective intelligence itself.” (p.11)

It seems important that ‘knowledge’ is also not to be confined to ‘facts alone’; rather, it ‘lives’ in the reciprocal interactions of human actors, and thereby knowledge is a dynamic process. (cf. p.11) Humans as part of such knowledge processes receive their ‘identities’ from this flow. (cf. p.12) One consequence of this is “… the other remains enigmatic, becomes a desirable being in every respect.” (p.12) With some further comment: “No one knows everything, everyone knows something, all knowledge resides in humanity. There is no transcendent store of knowledge and knowledge is simply the sum of what we know.” (p.13f)

‘Collective intelligence’ dwells close to dynamic knowledge: “The basis and goal of collective intelligence is the mutual recognition and enrichment of individuals rather than the cult of fetishized or hypostatized communities.” (p.13) Thus Lévy can state that collective intelligence “is born with culture and grows with it.” (p.16) He makes this more concrete by embedding it directly in a community: “In an intelligent community the specific objective is to permanently negotiate the order of things, language, the role of the individual, the identification and definition of objects, the reinterpretation of memory. Nothing is fixed.” (p.17)

These different aspects culminate in the vision of “a new humanism that incorporates and enlarges the scope of self knowledge into a form of group knowledge and collective thought. … [the] process of collective intelligence [is] leading to the creation of a distinct sense of community.” (p.17)

One side effect of such a new humanism could be “new forms of democracy, better suited to the complexity of contemporary problems…”.(p.18)

First COMMENTS

At this point I will give only a few comments, holding back more general and final thoughts until I have finished reading the whole text.

Shortened Timeline – Wrong Picture

The timeline which Lévy is using is helpful, but this timeline is ‘incomplete’. What is missing is the whole time ‘before’ the advent of the homo sapiens within the biological evolution. And this ‘absence’ hides the understanding of one, if not ‘the’, most important concept of all life, including the homo sapiens and its cultural process.

This central concept is today called ‘sustainable development’. It points to a ‘dynamical structure’ which is capable of ‘adapting to an ever changing environment’. Life on planet earth has been possible from the very beginning only on account of this fundamental capability, starting with the first cells and being kept strongly alive through all the 3.5 billion (3.5 × 10^9) years in all the fascinating developments that followed.

This capability to ‘adapt to an ever changing environment’ implies the ability to change the ‘working structure, the body’ in such a way that the structure can respond in new ways if the environment changes. Such a change has two sides: (i) the real ‘production’ of the working structures of a living system, and (ii) the ‘knowledge’ which is necessary to ‘inform’ the processes of forming an organism and keeping it ‘in action’. And these basic mechanisms additionally have (iii) to be ‘distributed in a whole population’, whose sheer number gives enough redundancy to compensate for ‘wrong proposals’.

Knowing this, the appearance of the homo sapiens life form manifests a qualitative shift in the structure of adaptation so far: surely prepared by several millions of years, the body of the homo sapiens with its unusual brain enabled new forms of ‘understanding the world’ in close connection with new forms of ‘communication’ and ‘cooperation’. With the homo sapiens the brains became capable of talking (mediated by their body and the surrounding body world) with other brains hidden in other bodies in a way which enabled the sharing of ‘meaning’ rooted in the body world as well as in one’s own body. Through communication this capability created a ‘network of distributed knowledge’ encoded in the shared meaning of individual meaning functions. As long as communication with a certain meaning function and its shared meanings ‘works’, this ‘distributed knowledge’ exists. If the shared meaning weakens or breaks down, this distributed knowledge is ‘gone’.

Thus, a homo sapiens population does not have to wait for another generation until new varieties of its body structures show up and compete with the changing environment. A homo sapiens population has the capability to perceive the environment, and itself, in a way that additionally allows new forms of ‘transformations of the perceptions’, such that ‘cognitive varieties of perceived environments’ can be ‘internally produced’, ‘communicated’, and used for ‘sequences of coordinated actions’ which can change the environment and the homo sapiens itself.

The cultural history then shows, as Lévy has outlined briefly on his pages 5-10, that the homo sapiens population (distributed in many competing smaller sub-populations) ‘invented’ more and more ‘behavior patterns’, ‘social rules’, and a rich ‘diversity of tools’ to improve communication and to improve the representation and processing of knowledge, which in turn helped to enable even more complex ‘sequences of coordinated actions’.
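The idea that distributed knowledge exists only as long as the individual meaning functions overlap can be illustrated with a small toy model. This is only a sketch under strong assumptions that are not part of the text: each actor's ‘meaning function’ is reduced to a plain mapping from expressions to referents, and the names `shared_meaning`, `anna`, and `ben` are hypothetical.

```python
def shared_meaning(meaning_functions):
    """Return the expression -> referent pairs on which all actors agree.

    Assumption for illustration: a 'meaning function' is a plain dict.
    """
    if not meaning_functions:
        return {}
    first, *rest = meaning_functions
    return {
        expr: ref
        for expr, ref in first.items()
        if all(expr in m and m[expr] == ref for m in rest)
    }


# Two hypothetical actors with partly overlapping meaning functions.
anna = {"water": "H2O", "fire": "combustion", "tree": "plant"}
ben = {"water": "H2O", "fire": "combustion", "tree": "data structure"}

# The distributed knowledge is what both actors can rely on in communication.
common_before = shared_meaning([anna, ben])

# If one actor's usage drifts, the shared part shrinks.
ben["water"] = "a brand name"
common_after = shared_meaning([anna, ben])
```

In this toy model nothing is stored ‘between’ the actors: the shared part is recomputed from the individual mappings, so it shrinks as soon as one mapping drifts.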

Sustainability & Collective Intelligence

Although until today there are no commonly accepted definitions of ‘intelligence’ and of ‘knowledge’ available [6], it makes some sense to locate ‘knowledge’ and ‘intelligence’ in this ‘communication based space of mutually coordinated actions’. And this embedding implies thinking about knowledge and intelligence as a property of a population which ‘collectively’ is learning, is understanding, is planning, and is modifying its environment as well as itself.

And having this distributed capability a population has all the basics to enable a ‘sustainable development’.

Therefore the capability for a sustainable development is an emergent capability based on the processes enabled by a distributed knowledge enabled by a collective intelligence.

Having sketched this out, all the wonderful statements of Lévy seem to be ‘true’ in that they describe a dynamic reality which is provided by biological life as such.

A truly Open Space with Real Boundaries

Looking from the outside onto this biological mystery of sustainable processes based on collective intelligence using distributed knowledge one can identify incredible spaces of possible continuations. In principle these spaces are ‘open spaces’.

Looking to the details of this machinery — because we are ‘part of it’ — we know by historical and everyday experience that these processes can fail every minute, even every second.

To ‘improve’ a given situation one needs (i) not only a criterion which enables a judgment about something to be classified as being ‘not good’ (e.g. the given situation), one needs further (ii) some ‘minimal vision’ of a ‘different situation’, which can be classified by a criterion as being ‘better’. And, finally, one needs (iii) a minimal ‘knowledge’ about possible ‘actions’ which can change the given situation in successive steps to transform it into the envisioned ‘new better situation’ functioning as a ‘goal’.
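The three requirements can be sketched as a tiny planning problem. The following is a minimal illustration under assumptions that are not in the text: a ‘situation’ is reduced to a single number (how many of 10 participants share a common view), and the names `find_plan`, `is_not_good`, `is_goal`, and `ACTIONS` are hypothetical.

```python
from collections import deque


def find_plan(start, is_goal, actions, max_steps=10):
    """Breadth-first search for a shortest action sequence from `start`
    to any situation accepted by `is_goal`. Returns None if none is found."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, plan = queue.popleft()
        if is_goal(state):
            return plan
        if len(plan) >= max_steps:
            continue
        for name, apply_action in actions.items():
            nxt = apply_action(state)
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, plan + [name]))
    return None


# (i) A criterion that classifies the given situation as 'not good'.
def is_not_good(state):
    return state < 5


# (ii) A minimal vision of a 'better' situation, functioning as the goal.
def is_goal(state):
    return state >= 8


# (iii) Minimal knowledge about actions that change the situation.
ACTIONS = {
    "workshop": lambda s: min(s + 3, 10),
    "newsletter": lambda s: min(s + 1, 10),
}

plan = find_plan(2, is_goal, ACTIONS)
```

If any one of the three ingredients is missing (no criterion, no goal, or no known actions), the search has nothing to work with, which mirrors the point that all three are needed together.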

Looking around and looking back, everybody surely knows from everyday life that these three tasks are far from trivial. To judge something to be ‘not good’ or ‘not good enough’ presupposes a minimum of ‘knowledge’ which should be sufficiently evenly ‘distributed’ in the ‘brains of all participants’. Without sufficient agreement no common judgment will be possible. At the time of this writing it seems that there is plenty of knowledge around, but it is not working as a coherent knowledge space accepted by all participants. Knowledge battles against knowledge. The same holds for tasks (ii) and (iii).

There are many reasons why it is not working. Because the ‘big challenges’ in particular are of a ‘global nature’ and follow a certain time schedule, there is not much time available to ‘synchronize’ the necessary knowledge between all. Mankind has until now predominantly supported the sheer amount of knowledge and ‘individual specialized solutions’, but has missed the challenge of developing at the same time new and better ‘common processes’ of ‘shared knowledge’. The invention of computers, networks of computers, and then the multi-faceted cyberspace is a great and important invention, but it is not really helpful as long as cyberspace has not become a ‘genuine human-like’ tool for ‘distributed human knowledge’ and ‘distributed collective human-machine intelligence’.

Truth

One of the most important challenges for all kinds of knowledge is the ability to enable a ‘knowledge inspired view’ of the environment, including the actor, which is ‘in agreement with the reality of the environment’; otherwise the actions will not be able to support life in the long run. [7] Such an ‘agreement’ is a challenge, especially if the ‘real processes’ are ‘complex’, ‘distributed’, and happening in ‘large time frames’. As all human societies today demonstrate, this fundamental ability to use ‘empirically valid knowledge’ is partially well developed, but in many other cases it seems to be nearly non-existent. There is a strong (inborn!) tendency of human persons to think that the ‘pictures in their heads’ ‘automatically’ represent knowledge that is in agreement with the real world. They do not. Thus ‘dreams’ are ruling the everyday world of societies. And the proportion of brains with such ‘dreams’ seems to grow. In a certain sense this is a kind of ‘illness’: invisible, but strongly effective and highly infectious. Science alone does not seem to be a sufficient remedy, but it is a substantial condition for a remedy.

COMMENTS

[*] The decisive hint for this book came from Athene Sorokowsky, who is a member of my research group.

[1] Gerd Doeben-Henisch, The general idea of the oksimo paradigm: https://www.uffmm.org/2022/01/24/newsletter/, January 2022

[2] Pierre Lévy in wkp-en: https://en.wikipedia.org/wiki/Pierre_L%C3%A9vy

[3] Karl Popper in wkp-en: https://en.wikipedia.org/wiki/Karl_Popper. One of the papers I have written commenting on Popper can be found HERE.

[4] Pierre Lévy, intlekt – metadata, see: https://intlekt.io/blog/

[5] Whoever wants to know what Lévy ‘really’ has written has to go back to the text of Lévy directly. … then the reader will read the text of Lévy with ‘his own point of view’ … indeed, even then the reader will not know with certainty whether he really understood Lévy ‘right’. … reading a text is always a ‘dialogue’ …

[6] Not in Philosophy, not in the so-called ‘Humanities’, not in the Social Sciences, not in the Empirical Sciences, and not in Computer Science!

[7] The ‘long run’ can be very short if you misjudge a situation in traffic, or a medical doctor makes a mistake, or a nuclear reactor has the wrong sensors, or ….

Continuation

See HERE.