eJournal: uffmm.org, ISSN 2567-6458, Aug 16 - Aug 18, 2021

Email: info@uffmm.org

Author: Gerd Doeben-Henisch

Email: gerd@doeben-henisch.de

SCOPE

In the uffmm review section the different papers and books are discussed from the point of view of the oksimo paradigm. [2] Here the author reads the book “Logic. The Theory Of Inquiry” by John Dewey, 1938. [1]

PREFACE DEWEY 1938/9

If one looks at the time span between Dewey’s first published book in 1887 (Psychology) and 1938 (Logic), one finds 51 years of experience. Thus this book about logic can be seen as a book digesting manifold knowledge from a very special point of view: logic as a theory of inquiry.

And because Dewey is regarded as one of the “primary figures associated with the philosophy of pragmatism” [3], it is no surprise that in his preface to the book ‘Logic …’ [1] he mentions as one interest not only the “… interpretation of the forms and formal relations that constitute the standard material of logical tradition” (cf. p.1); within this perspective he also draws particular attention to “… the principle of the continuum of inquiry” (cf. p.1).

If one sees, like Dewey, the “basic conception of inquiry” as the “determination of an indeterminate situation” (cf. p.1), then the implicit relations can enable “a coherent account of the different propositional forms to be given”. This provides a theoretical interface to logical thinking as thinking in inferences, as well as a philosophical interface to pragmatism as a kind of inquiry which sees strong relations between the triggering assumptions and the possible consequences created by agreed procedures leading from the given and expected to the final consequences.

Dewey himself is very skeptical about the term ‘Pragmatism’, because

“… the word lends itself [perhaps] to misconception”, thus “that it seemed advisable to avoid its use.” (cf. p.2) But Dewey does not stop at simple avoidance; he gives a “proper interpretation” of the term ‘pragmatic’, according to which “the function of consequences” can be interpreted as “necessary tests of the validity of propositions, provided these consequences are operationally instituted and are such as to resolve the specific problem evoking the operations.” (cf. p.2)

Thus Dewey assumes the following elements of a pragmatically minded process of inquiry:

- A pragmatic inquiry is a process leading to some consequences.

- These consequences can be seen as tests of the validity of propositions.

- As a necessary condition for a consequence to qualify as a test of the assumed propositions, one has to assume that “these consequences are operationally instituted and are such as to resolve the specific problem”.

- For consequences, which differ from the assumed propositions [represented by some expressions], to qualify as confirming the assumed validity of those propositions, the assumed validity must be representable as an expectation of possible outcomes which are observably decidable.

In other words: some researchers assume that some propositions, represented by some expressions, are valid because their commonly shared observations have convinced them of this. They associate these assumed propositions with an expected outcome, represented by some expressions which the researchers can interpret in such a way that they are able to decide whether an upcoming situation can be judged as the situation which is expected as a valid outcome (= consequence). Then there must exist some agreed procedures (operationally instituted) whose application to the given starting situation produces the expected outcome (= consequence). Then the whole process, consisting of a start situation with a given expectation as well as given procedures, can generate a sequence of situations following one another, with the expected outcome reached after finitely many situations.

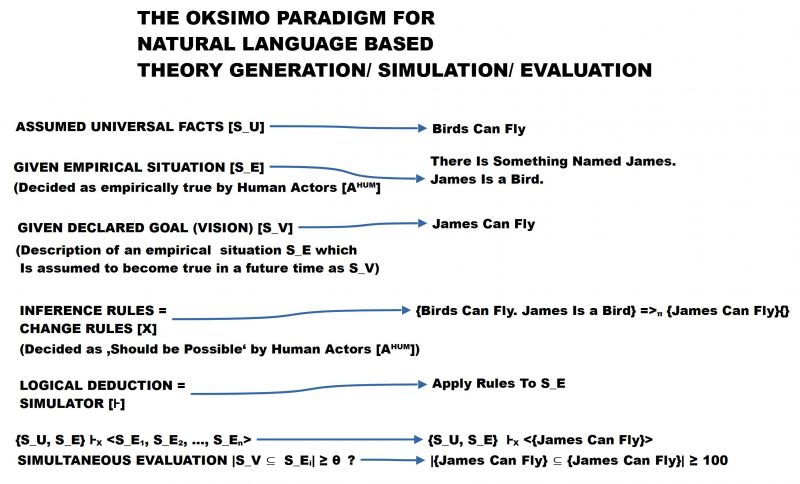

If one interprets these agreed procedures as inference rules and the assumed expressions as assumptions and expectations then the whole figure can be embedded in the usual pattern of inferential logic, but with some strong extensions.
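The pattern just described can be sketched in a few lines of Python. This is only an illustrative sketch under strong assumptions, not Dewey's formulation and not the oksimo software: a situation is modeled as a set of plain-language expressions, an agreed procedure as a hypothetical rule built with `make_rule`, and the expectation as a set of expressions that must all be part of the final situation. All names and the lamp example are invented for illustration.

```python
from typing import Callable, FrozenSet, List

# Assumption: a situation is a set of plain-language expressions.
Situation = FrozenSet[str]
# Assumption: an agreed procedure maps a situation to a follow-up situation.
Rule = Callable[[Situation], Situation]


def make_rule(condition: set, add: set, remove: set) -> Rule:
    """Hypothetical change rule: if all condition expressions hold,
    remove some expressions and add others; otherwise change nothing."""
    def rule(situation: Situation) -> Situation:
        if condition <= situation:
            return frozenset((situation - remove) | add)
        return situation
    return rule


def run_inquiry(start: Situation, rules: List[Rule], expected: Situation,
                max_steps: int = 10) -> List[Situation]:
    """Apply the rules step by step and return the sequence of situations.
    Stops when the expectation is met, when nothing changes any more,
    or after max_steps steps."""
    sequence = [start]
    current = start
    for _ in range(max_steps):
        if expected <= current:
            break
        following = current
        for rule in rules:
            following = rule(following)
        if following == current:  # no rule applies any more
            break
        current = following
        sequence.append(current)
    return sequence


start = frozenset({"the lamp is off", "the switch is up"})
rules = [
    make_rule({"the switch is up"}, {"the switch is down"}, {"the switch is up"}),
    make_rule({"the switch is down", "the lamp is off"},
              {"the lamp is on"}, {"the lamp is off"}),
]
expected = frozenset({"the lamp is on"})

sequence = run_inquiry(start, rules, expected)
# The consequence serves as a test: is the expectation part of the final situation?
confirmed = expected <= sequence[-1]
print(len(sequence), confirmed)
```

In this toy reading the rules play the role of inference rules, the start situation that of the assumptions, and the subset check at the end that of the observable decision whether the expected outcome has occurred.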

Dewey is quite optimistic about the conformity of this pragmatic view of an inquiry with a view of logic: “I am convinced that acceptance of the general principles set forth will enable a more complete and consistent set of symbolizations than now exists to be made.” (cf. p.2) But he points to one aspect which would be necessary for a pragmatically inspired view of logic and which is not yet realized in ‘normal logic’: “the need for development of a general theory of language in which form and matter are not separated.” This is a very strong point, because the architecture of modern logic depends fundamentally on the complete abandonment of the meaning of language; the only remaining shadow of meaning resides in the assumed property of being ‘true’ or ‘false’, which is attached to expressions (not propositions!). To re-introduce ‘meaning’ into logic by the usage of ‘normal language’ would amount to a complete rewriting of the whole of modern logic.

When Dewey wrote these lines in 1938, there was not the faintest idea in logic of what such a rewriting of the whole of logic could look like.

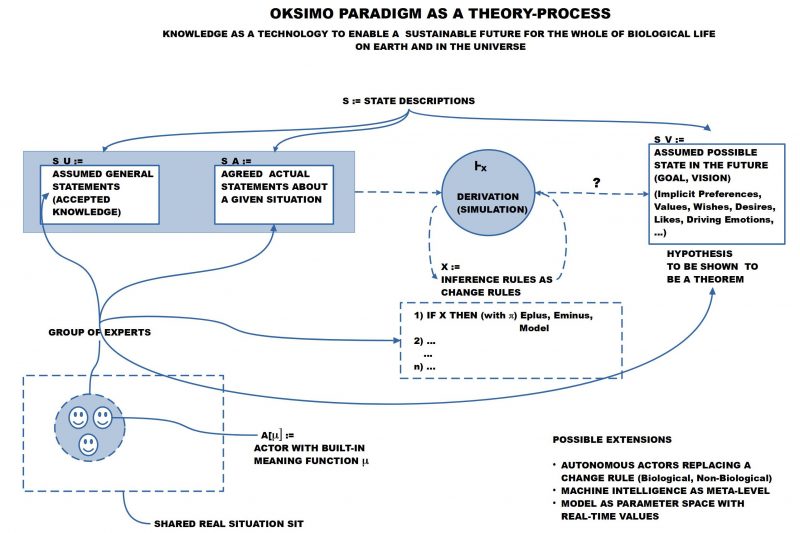

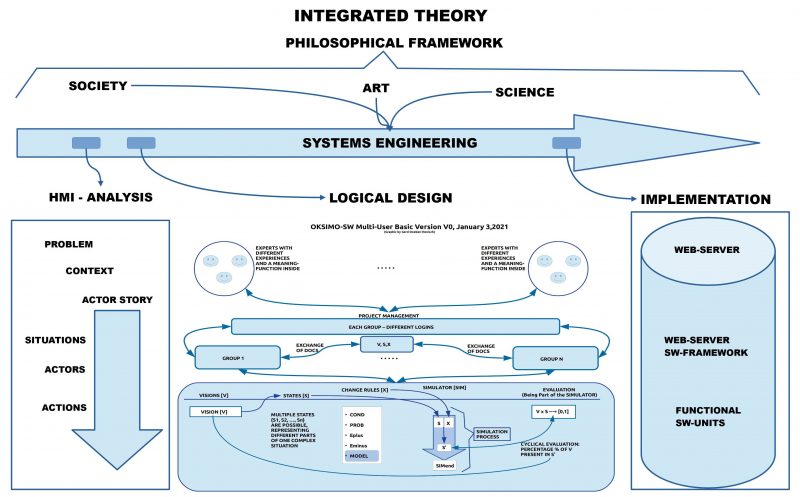

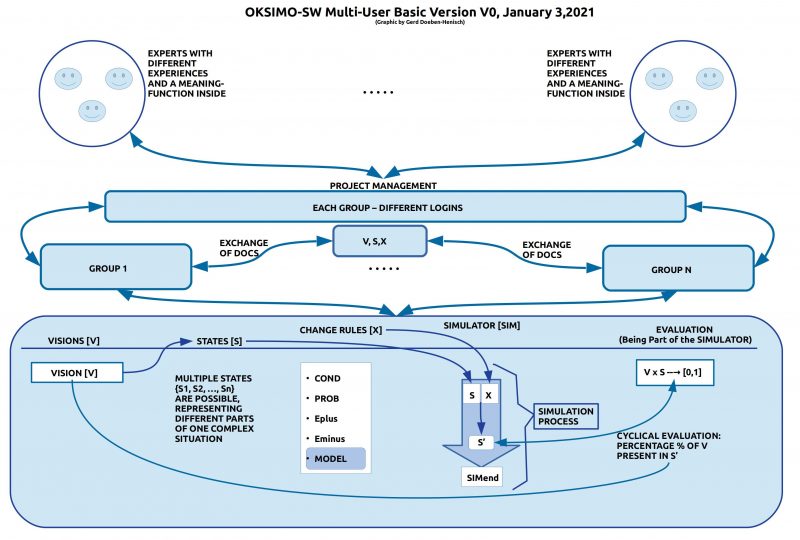

With the new oksimo paradigm there could perhaps be a slight chance of doing it. Why? Here are the main arguments:

- The oksimo paradigm assumes an inference process leading from some assumed starting situation to some consequences generated by the application of some agreed change-rules.

- All situations are assumed to have a twofold nature: (i) primarily they are given as expressions of some language (it can be a normal language!); (ii) secondarily these expressions are part of the used normal language, where every researcher is assumed to have a ‘built-in’ meaning function which has collected enough ‘meaning’ during his/her individual learning to allow a symbolically enabled cooperation with other researchers.

- Every researcher can judge at any time whether a given or inferred situation is in agreement with his/her interpretation of the expressions and their relation to the given or considered possible situation.

- If the researchers additionally assume in the beginning an expectation (goal/vision) of a possible outcome (possible consequence), then it is possible at every point of the sequence to judge to which degree the actual situation corresponds to the expected situation.
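The last point, judging at every step to which degree the actual situation corresponds to the expected one, can be illustrated with a small Python sketch. It rests on a deliberately crude assumption: the researcher's ‘built-in’ meaning function is replaced by a literal comparison of expressions, and the degree is simply the share of goal expressions found in the situation. The function name `goal_degree` and the garden example are hypothetical and are not taken from the oksimo paradigm itself.

```python
from typing import FrozenSet, List


def goal_degree(situation: FrozenSet[str], goal: FrozenSet[str]) -> float:
    """Share of goal expressions that are part of the situation (0.0 to 1.0).
    Assumption: literal matching stands in for the researchers' judgment."""
    if not goal:
        return 1.0  # an empty goal is trivially fulfilled
    return len(goal & situation) / len(goal)


# Hypothetical goal (vision) and a hypothetical sequence of situations.
goal = frozenset({"the garden has a fence", "the garden has a gate"})
sequence: List[FrozenSet[str]] = [
    frozenset({"the garden is open"}),
    frozenset({"the garden has a fence"}),
    frozenset({"the garden has a fence", "the garden has a gate"}),
]

degrees = [goal_degree(situation, goal) for situation in sequence]
print(degrees)
```

A real judgment by researchers would of course rest on their shared understanding of the expressions, not on string equality; the sketch only shows that a graded comparison between actual and expected situation is possible at every point of the sequence.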

Dewey’s second requirement for the use of logic in a pragmatic inquiry is given in the statement “that an adequate set of symbols depends upon prior institution of valid ideas of the conceptions and relations that are symbolized.” (cf. p.2)

Thus not only the usage of normal language is required but also some presupposed knowledge. Within the oksimo paradigm it is possible to assume as much presupposed knowledge as needed.

RESULTS SO FAR

After reading the preface to the book it seems that the pragmatic view of inquiry combined with some idea of modern logic can directly be realized within the oksimo paradigm.

The following posts will show whether this is a good working hypothesis or not.

COMMENTS

[1] John Dewey, Logic. The Theory Of Inquiry, New York, Henry Holt and Company, 1938 (see: https://archive.org/details/JohnDeweyLogicTheTheoryOfInquiry with several formats; I am using the kindle (= mobi) format: https://archive.org/download/JohnDeweyLogicTheTheoryOfInquiry/%5BJohn_Dewey%5D_Logic_-_The_Theory_of_Inquiry.mobi . This is very convenient for working directly with the text. Additionally I am using the free reader ‘foliate’ under ubuntu 20.04: https://github.com/johnfactotum/foliate/releases/). The page numbers in the text of the review, like (p.13), are the page numbers of the ebook as indicated in the ebook reader foliate. (There exists no kindle version for linux, although amazon couldn’t work without linux servers!)

[2] Gerd Doeben-Henisch, 2021, uffmm.org, THE OKSIMO PARADIGM. An Introduction (Version 2), https://www.uffmm.org/wp-content/uploads/2021/03/oksimo-v1-part1-v2.pdf

[3] John Dewey, Wikipedia [EN]: https://en.wikipedia.org/wiki/John_Dewey

Continuation

See part 2 HERE.

MEDIA

Here is a spontaneous recording of the author, talking ‘unplugged’ into a microphone about how he would describe the content of the text above in a few words. It’s not perfect, but it’s ‘real’: we are all real persons who are not perfect, but we have to fight for ‘truth’ and a better life while being ‘imperfect’ … take it as ‘fun’ 🙂